Thangs announced a new AI-powered search function that could make finding 3D models incredibly simple.

There are several major 3D model repositories on the Internet, and they are so large that no doubt they include the 3D model you’re seeking. Thingiverse alone is currently approaching six million 3D models, and there are other repositories with similarly growing collections.

The problem is that as these repos grow, it is increasingly difficult to find what you want.

Typically search is done by keyword. The requestor types in a word or two, and the repository then searches descriptions, titles, tags and other textual elements in a vain attempt to identify appropriate items.

This, of course, fails miserably on large repos such as Thingiverse.
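To make the weakness concrete, here is a minimal sketch of keyword matching. The `ModelEntry` record, its field names and the tiny catalog are invented for illustration; this is not how Thingiverse or any other repository actually implements search.

```python
from dataclasses import dataclass, field


@dataclass
class ModelEntry:
    """A hypothetical repository record; field names are illustrative only."""
    title: str
    description: str = ""
    tags: list[str] = field(default_factory=list)


def keyword_search(entries, query):
    """Return entries whose combined text contains every word of the query."""
    words = query.lower().split()
    results = []
    for entry in entries:
        # Flatten title, description and tags into one searchable string.
        text = " ".join([entry.title, entry.description, *entry.tags]).lower()
        if all(word in text for word in words):
            results.append(entry)
    return results


# Invented sample data, not real repository content.
catalog = [
    ModelEntry("Spiral Vase", "A twisted vase for the mantel", ["vase", "decor"]),
    ModelEntry("Flower holder", "Tall container for cut flowers", ["home"]),
    ModelEntry("Vase mode calibration cube", "Test print", ["calibration"]),
]

hits = keyword_search(catalog, "vase")
print([entry.title for entry in hits])
# → ['Spiral Vase', 'Vase mode calibration cube']
```

The flower holder is arguably a vase but is missed because nobody typed the word, while the calibration cube matches even though it is not a vase at all. Multiply both effects by millions of entries and the results become hard to trust.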

I often do what I call the “Vase Test”. This involves heading over to Thingiverse and seeing how many responses one gets from specifying “Vase” in a search. Today’s result is quite telling.

Thingiverse responds with only 10,000 entries, and then it stops! Basically they’ve given up on finding vases for me, which demonstrates how broken textual search is for 3D models. Previously, each time I performed the Vase Test on Thingiverse I would get a higher and higher count, far larger than 10,000. Now we’ll never know how many vases are on Thingiverse.

A few repos have developed “3D search”. This involves uploading a 3D model, and the search then attempts to find items with similar geometry.

Unfortunately, that doesn’t really work because very often you don’t have a similar 3D model: you want something new. Even worse, novices to 3D printing won’t have a chance of using 3D search because they are often unable to design even a rough replica of their desired object.
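For readers curious how a geometry comparison might work at all, here is a minimal sketch using a classic “shape distribution” descriptor: a histogram of distances between random pairs of surface points. This is my choice of technique for illustration only; I don’t know which method any particular repository uses. The function names and the toy point clouds standing in for real meshes are all assumptions.

```python
import math
import random


def d2_descriptor(points, samples=2000, bins=8, seed=0):
    """Histogram of distances between random point pairs, normalized for scale."""
    rng = random.Random(seed)
    distances = []
    for _ in range(samples):
        a, b = rng.sample(points, 2)
        distances.append(math.dist(a, b))
    longest = max(distances)
    histogram = [0] * bins
    for d in distances:
        # Dividing by the longest distance makes the descriptor size-independent.
        index = min(int(d / longest * bins), bins - 1)
        histogram[index] += 1
    return [count / samples for count in histogram]


def descriptor_distance(h1, h2):
    """L1 distance between two histograms; smaller means more similar."""
    return sum(abs(x - y) for x, y in zip(h1, h2))


def sphere_points(n, radius, rng):
    """Random points on a sphere surface (a stand-in for a sampled mesh)."""
    points = []
    for _ in range(n):
        v = [rng.gauss(0, 1) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        points.append(tuple(radius * c / norm for c in v))
    return points


def rod_points(n, length, rng):
    """Random points inside a long, thin rod."""
    return [
        (rng.uniform(0, length), rng.uniform(0, 0.05), rng.uniform(0, 0.05))
        for _ in range(n)
    ]


rng = random.Random(42)
small_ball = d2_descriptor(sphere_points(500, 1.0, rng))
large_ball = d2_descriptor(sphere_points(500, 10.0, rng))
rod = d2_descriptor(rod_points(500, 5.0, rng))

# Two spheres of different sizes should look alike; a sphere and a rod should not.
print(descriptor_distance(small_ball, large_ball) < descriptor_distance(small_ball, rod))
```

Note that the sketch still needs a 3D shape as the query, which is exactly the limitation described above.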

Thangs has just introduced a new type of search that could resolve these issues: 2D search.

The concept is to upload a picture of what you are looking for, and then Thangs will attempt to find 3D objects that have similar geometry.

How could this work? It’s through the magic of AI.

We’re all familiar with the concept of text prediction: this happens when you type in a search box and Google attempts to fill in the remainder of the search query.

That kind of approach has been scaled up in recent releases of AI software. For example, you can type in a hypothetical caption for an image, and the AI will provide an image to match. You can type in a question and AI will provide an answer.

Another recently developed form of this is to provide a 2D image and have the AI produce a corresponding 3D model. Companies like LumaLabs have leveraged this concept to create advanced 3D scanning systems using only a series of images. LumaLabs then went on to create a “text to 3D” service.

This sort of approach is being used by Thangs to implement their new 2D search.

It’s likely they are using AI to convert the image into a rough 3D model approximation, and then using that to do the actual search.
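To be clear, this is speculation on my part, but the two-step idea can be sketched in a few lines. Everything here is hypothetical: `image_to_shape` stands in for whatever AI step turns a picture into a rough shape description, the three-number vectors are invented toy data, and cosine similarity is simply a common way to rank such vectors. None of it is taken from Thangs.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Similarity of two vectors by angle; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search_by_image(
    image: object,
    image_to_shape: Callable[[object], Vector],
    index: dict[str, Vector],
    top_k: int = 3,
) -> list[str]:
    """Rank indexed models by similarity to the shape estimated from an image."""
    # Step 1 (hypothetical AI step): 2D image -> rough 3D shape vector.
    query = image_to_shape(image)
    # Step 2: ordinary similarity search against precomputed model vectors.
    ranked = sorted(
        index,
        key=lambda name: cosine_similarity(query, index[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Invented toy index: model name -> made-up shape vector.
index = {
    "vase": [0.9, 0.1, 0.0],
    "gear": [0.0, 0.2, 0.9],
    "bowl": [0.7, 0.6, 0.1],
}


def fake_image_to_shape(image: object) -> Vector:
    """Placeholder for the AI model; always returns a vase-like vector."""
    return [1.0, 0.2, 0.0]


results = search_by_image("photo-of-a-vase.jpg", fake_image_to_shape, index, top_k=2)
print(results)
# → ['vase', 'bowl']
```

The appeal of this design is that the hard part is confined to step 1; once a picture becomes a shape vector, the rest is a standard nearest-match lookup that needs no keywords at all.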

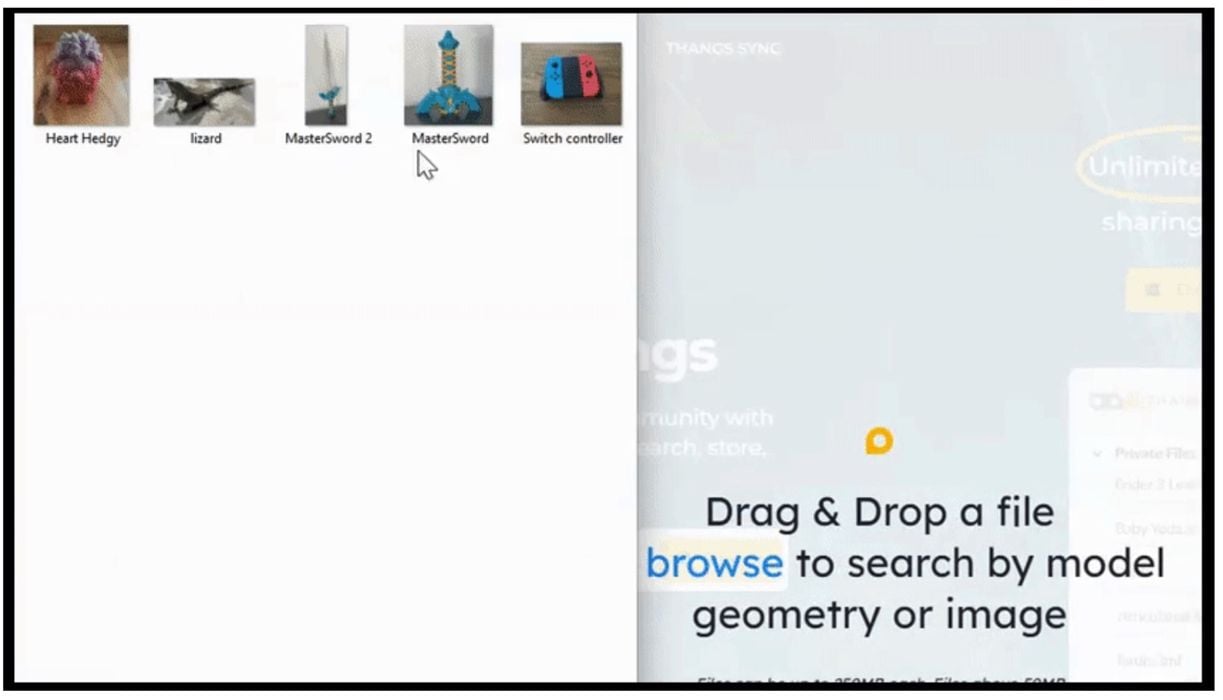

As shown in the image above, Thangs now allows you to upload a 2D image of, well, anything, and it then attempts to find it in their vast collection.

I haven’t tried this yet, but it may even be possible to draw a rough shape and then upload its image for a search.

This is an incredibly simple way to search for 3D models that, hopefully, will become popular among 3D model repositories.

This could be the only way to properly search large collections, and that certainly would be a boon to the industry as a whole: no more giving up on search!