NVIDIA announced a new 3D generative AI system, LATTE3D.

LATTE3D stands for “Large-scale Amortized Text-To-Enhanced3D Synthesis”, a name dangerously close to becoming a backronym. It is simply an advanced text-to-3D software tool.

We’ve been very interested in text-to-3D tools for some time because we see them as a possible way to overcome the CAD barrier that prevents many people and companies from fully exercising the capabilities of 3D printing. You can’t print without a 3D model, and making 3D models is very challenging: significant skills and expensive software are usually required.

There have been several attempts at producing text-to-3D tools in recent months, but those that I’ve tested have had limited results. Typically the generated 3D models are rough, or constrained within a specific shape domain.

Don’t get me wrong here: just because the results are not so great doesn’t mean this isn’t a momentous achievement by these software companies. Generating anything even slightly resembling a 3D model is quite unbelievable. I’m reminded of the famous cartoon of people amazed that a dog could play chess, where someone remarked “but he only wins once in a while.” It’s still amazing.

Has NVIDIA taken this technology up a notch with LATTE3D? They explain what they’re solving:

“Amortized methods like ATT3D optimize multiple prompts simultaneously to improve efficiency, enabling fast text-to-3D synthesis. However, they cannot capture high-frequency geometry and texture details and struggle to scale to large prompt sets, so they generalize poorly. We introduce LATTE3D, addressing these limitations to achieve fast, high-quality generation on a significantly larger prompt set.”

They say LATTE3D can produce fully textured 3D models in a single pass, and generates objects in only 400ms — less than a second!

Their internal processing approach is quite interesting. They use two networks, one for geometry and one for texture. In the first stage the two share the same weights. In the second stage the geometry network is frozen, and the texture is then generated for that previously generated geometry.
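To make the idea of shared and then frozen weights a little more concrete, here is a toy sketch in plain Python. It is not NVIDIA's code: `ToyNet`, `stage_one`, `stage_two`, the learning rate and the gradient values are all hypothetical stand-ins I made up for illustration. It only shows the general pattern of two networks that start with tied weights, after which one is frozen while the other keeps updating.

```python
class ToyNet:
    """Hypothetical stand-in for a network: just a list of weights."""

    def __init__(self, weights):
        self.weights = weights
        self.frozen = False

    def update(self, grads, lr=0.5):
        # A frozen network ignores all updates.
        if self.frozen:
            return
        for i, g in enumerate(grads):
            self.weights[i] -= lr * g


def stage_one(shared_weights, grads):
    """First phase: both networks point at the same weight list."""
    geometry = ToyNet(shared_weights)
    texture = ToyNet(shared_weights)  # same list object, so weights are tied
    geometry.update(grads)            # the change is visible through texture too
    return geometry, texture


def stage_two(geometry, texture, grads):
    """Second phase: freeze geometry, keep updating texture only."""
    geometry.frozen = True
    texture.weights = list(texture.weights)  # untie: texture gets its own copy
    geometry.update(grads)                   # no effect, geometry is frozen
    texture.update(grads)                    # only texture changes
    return geometry, texture


geometry, texture = stage_one([1.0, 2.0], grads=[1.0, 1.0])
geometry, texture = stage_two(geometry, texture, grads=[1.0, 1.0])
print(geometry.weights, texture.weights)
```

In a real system the freezing would be done by the training framework rather than a flag like this, but the effect is the same: the shape stays put while the texture continues to change.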

This is quite evident in this styling demonstration, where several geometries have different textures:

Is the quality of the results better? Compared to DreamFusion and other text-to-3D systems, sometimes yes and sometimes no. However, LATTE3D produces results almost 100X faster than DreamFusion. That speed advantage in itself is quite useful.

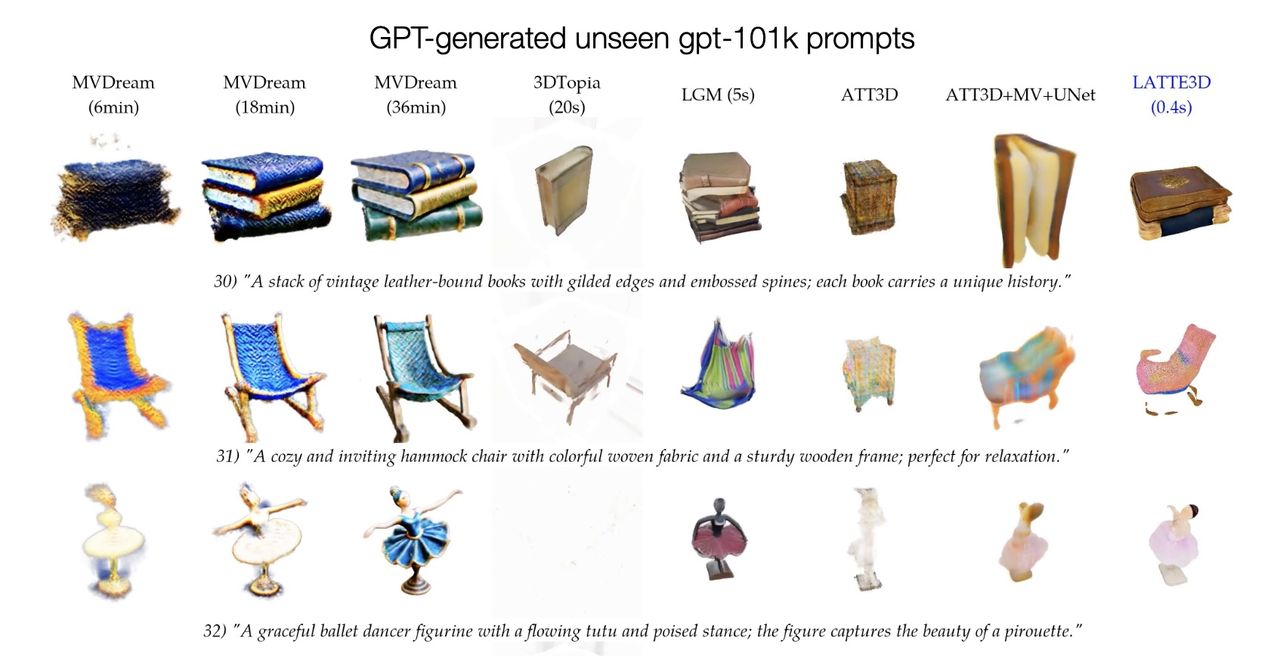

You can see some of the time and quality differences in this example:

LATTE3D also includes a post-generation “test-time optimization” step, where a few minutes of additional processing can dramatically increase the quality of the objects. This is the tradeoff: quality for time.

There’s also a method of “guiding” LATTE3D. If you input a point cloud, the system attempts to interpolate between the generated shape and the point cloud. This gives the user a significant amount of control.
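For a rough feel of what interpolating between two shapes means, here is a minimal sketch. It is my own illustration and not how LATTE3D works internally: it assumes a plain linear blend, a hypothetical `alpha` control value between 0 and 1, and two point lists of equal length whose points are already paired up by position in the list.

```python
def blend_points(generated, guide, alpha):
    """Linearly blend two equally sized lists of (x, y, z) points.

    alpha = 0.0 returns the generated shape unchanged,
    alpha = 1.0 returns the guiding point cloud.
    """
    if len(generated) != len(guide):
        raise ValueError("point lists must have the same length")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [
        tuple((1 - alpha) * g + alpha * p for g, p in zip(gen_pt, guide_pt))
        for gen_pt, guide_pt in zip(generated, guide)
    ]


generated_shape = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
guide_cloud = [(2.0, 4.0, 6.0), (3.0, 3.0, 3.0)]
print(blend_points(generated_shape, guide_cloud, 0.5))
```

The closer `alpha` gets to 1, the more the result follows the supplied point cloud, which is the kind of user control the guiding feature is meant to offer.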

LATTE3D seems to be a pretty huge step forward. By making the processing far more efficient, it’s much more likely we will see services make use of the tool. My hope is that eventually text-to-3D systems will become so advanced that the need for CAD tools will diminish.

Via NVIDIA