Today we’re taking a look at Luma AI’s Imagine 3D, a text-to-3D service.

Imagine 3D Background

Text to 3D is exactly what it sounds like: it’s a new experimental AI service that accepts a text prompt and automatically generates a 3D model.

This is quite similar to the many text to image AI generators, except that you receive a 3D model instead of an image. However, some of the issues are the same, as you might expect.

Luma AI (once known as Luma Labs) appeared recently with a powerful smartphone 3D scanning system that uses NeRF technology. NeRF, or Neural Radiance Fields, is an AI-powered technology that accepts several images of a subject and can then generate new images from alternate angles.

Luma AI leveraged this capability into a 3D scanning system that is actually quite effective, and in my view will eventually overtake photogrammetry as the primary non-hardware 3D scanning approach.

The company then developed a system on top of that technology to generate full 3D models. This seems quite reasonable, given that a NeRF can already generate views from all angles.

I gave the system a test to see how ready it is for general use.

Imagine 3D Operations

Currently Imagine 3D is in alpha testing. In other words, it’s extremely early in its development lifecycle, so I knew it would not be complete by any means.

To use the system you must have an account with Luma AI, which is easily obtained. You can sign up with either Apple or Google authentication, but strangely not with just an email address.

That signup gives you access to the scanning app, but not yet Imagine 3D, which is a secondary application at Luma AI. In order to gain access to Imagine 3D, you must apply to get on the waitlist.

I did this months ago, and never heard back. Eventually I realized that you must get on the company’s Discord server, hit the Imagine 3D channel and ask to be activated. For me, this happened almost immediately, and I wondered why I hadn’t thought of asking weeks ago.

Using Imagine 3D is ridiculously simple, and I guess that’s the point: removing all the CAD work required to build a 3D model.

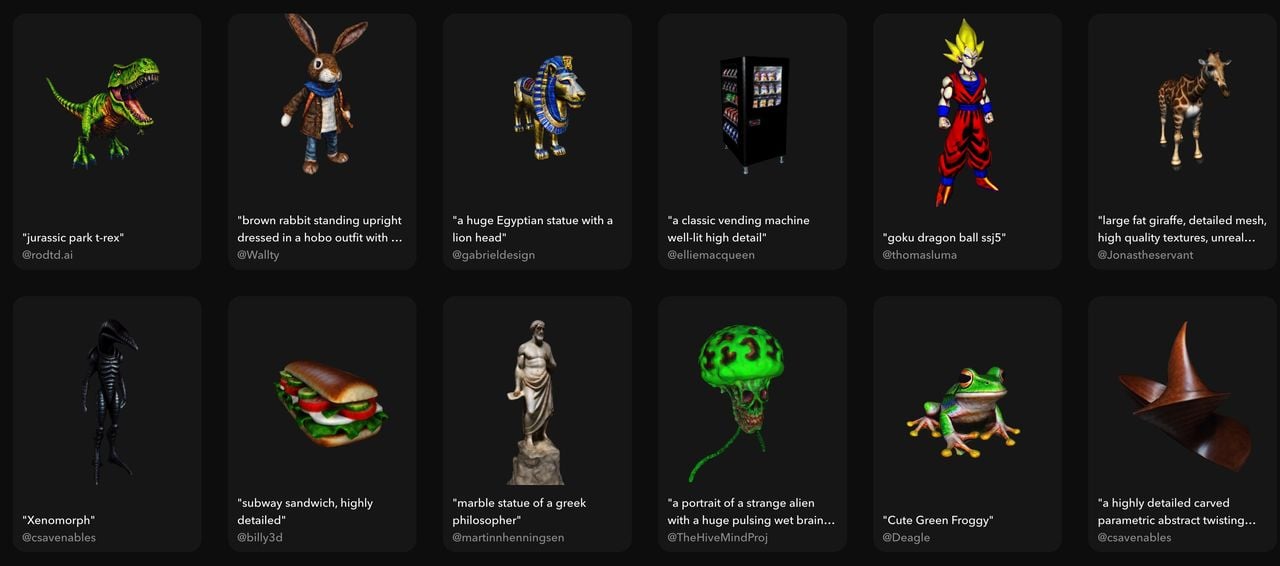

As you can see above, there’s simply a text input box where you type in your prompt. The prompts should be relatively simple, as the AI model doesn’t seem to have a very wide range of possibilities.

After hitting the generate button, you then wait for the results to appear. Typically this is done in less than an hour, and it’s also possible to have more than one generation in progress at a time.

Once a generation is complete you are provided with a spinnable 3D view. As for 3D printing, you can download the generated 3D model in .GLTF, .USDZ or .OBJ format. My testing used the .OBJ format, as it was easiest to use with my 3D tools, and it did include the textures as well. Did I mention the generated models are full color?

The downloads are sent as a .ZIP file, which must be expanded to see the contents. Then you’ll need a 3D tool to open the relevant file format, and in my case I used MeshLab to open the .OBJ result.
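If you would rather check a download in a script before opening it in MeshLab, something like the following minimal Python sketch could do it, using only the standard library. It assumes the archive contains at least one .OBJ file. The helper names `extract_archive` and `summarize_obj` are hypothetical and not part of any Luma AI tooling.

```python
import zipfile
from pathlib import Path


def extract_archive(zip_path, out_dir):
    """Unpack the downloaded .ZIP and return the paths of any .obj files inside."""
    out_dir = Path(out_dir)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(out_dir)
    # Compare suffixes case-insensitively so both "mesh.obj" and "mesh.OBJ" match.
    return sorted(p for p in out_dir.rglob("*") if p.suffix.lower() == ".obj")


def summarize_obj(obj_path):
    """Count vertices, texture coordinates and faces in a Wavefront OBJ file."""
    counts = {"vertices": 0, "texcoords": 0, "faces": 0}
    with open(obj_path, encoding="utf-8", errors="replace") as handle:
        for line in handle:
            parts = line.split()
            if not parts:
                continue
            if parts[0] == "v":
                counts["vertices"] += 1
            elif parts[0] == "vt":
                counts["texcoords"] += 1
            elif parts[0] == "f":
                counts["faces"] += 1
    return counts
```

Calling `extract_archive("imagine3d_download.zip", "imagine3d_model")` (the file name is a placeholder for whatever your browser saved) and passing each returned path to `summarize_obj` gives a quick sanity check: a nonzero texture coordinate count suggests the color information came along with the mesh.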

Using Imagine 3D is extremely simple, once you have access. But what kind of results do you receive?

Imagine 3D Model Results

I tested Imagine 3D with several prompts, ranging from simple to complex. In all cases the results were, well, not perfect. However, they were also quite interesting. Let’s take a look at what happened.

The prompt “marble bust of winston churchill with cigar” came out quite well:

However, there was no cigar. I speculated that Imagine 3D would have problems combining things together.

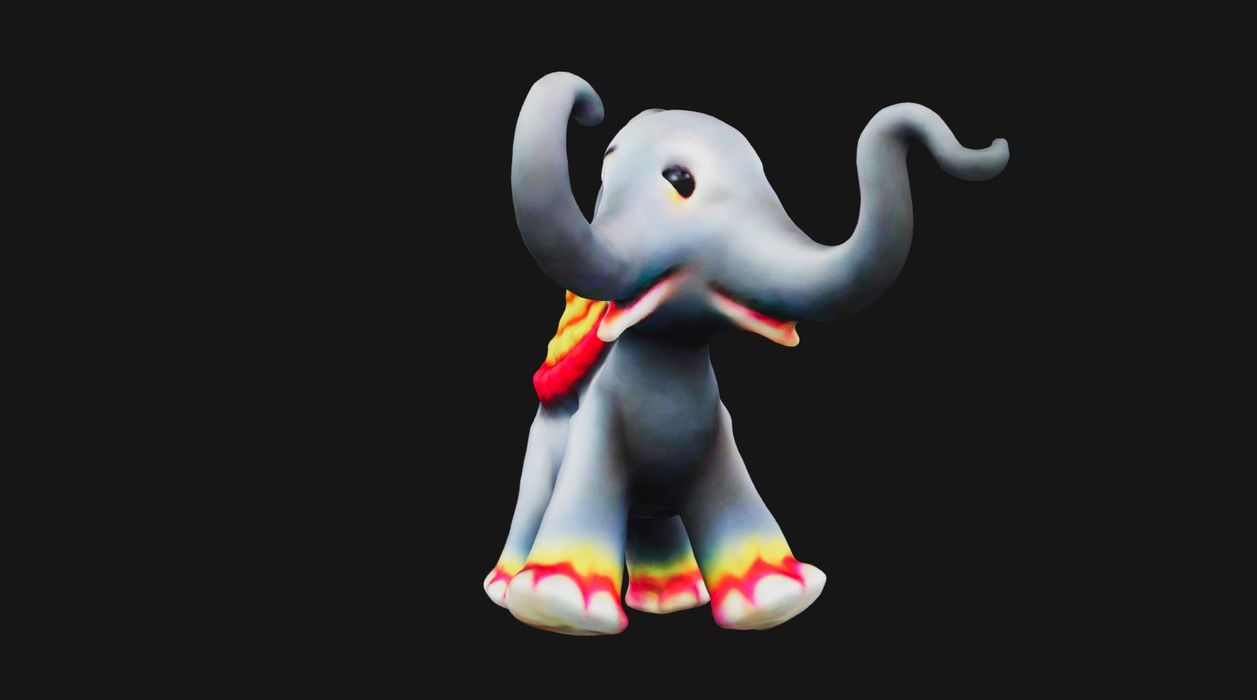

I then tried “a happy elephant with angel wings”, and got this, which at first looked pretty good — although no wings were present.

But wait, I spun the model around and realized this wasn’t a happy elephant at all, but in fact a nightmare elephant!

Yes, there were two trunks and three eyes, including a cyclops eye in-between the trunks. What a beast!

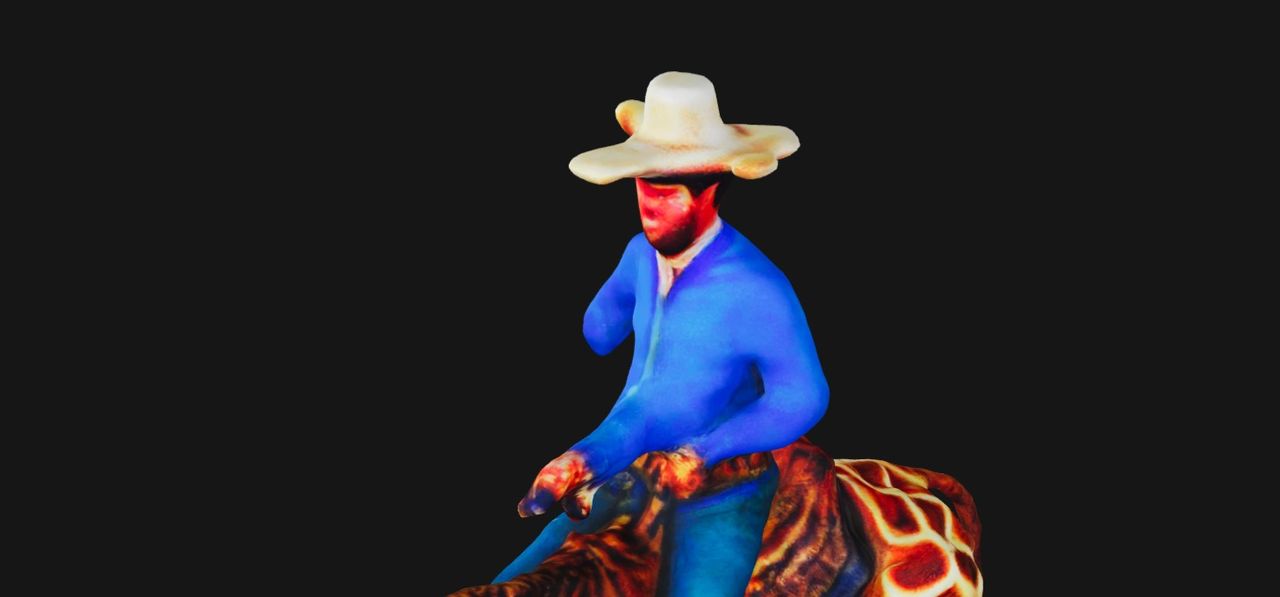

Now here’s “a cowboy riding a giraffe”:

It looks ok, until you spin it and realize our fellow has in fact three arms! Well, two and a half, anyway.

Could Imagine 3D produce two separate objects? I tried “A polar bear and a pineapple”:

It seemed to work, until again I rotated the 3D model:

Imagine 3D seems to produce only one 3D model at a time, and here it simply mushes the two concepts together. I get the feeling that it is really working in a 2D fashion: the 3D model matches the prompt from specific viewing angles only, rather than capturing the true intent of the prompt.

What about an abstract concept? I tried “The essence of life”:

Interesting, but I’m not sure what that has to do with life. Once more, I rotated it and discovered this:

It seems to have taken an image of a flower and extruded it. The flower could be an analogy for “life”, I suppose.

Imagine 3D Final Thoughts

As you can see from the results above, Imagine 3D isn’t really useful for generating specific 3D models, particularly ones requiring precise measurements or detailed resolution. The system seems fairly limited in its knowledge of the world’s shapes, but can handle single-object requests in many cases.

It is, however, quite fun to use and does produce some unexpected 3D models that can actually be 3D printed. You’ll never receive a result with a flat surface, however, so be prepared for a lot of support material.
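To get a rough feel for how much support a downloaded model might need, one could estimate the share of its surface that faces steeply downward. The sketch below is a simplification under several assumptions of mine: faces are wound counter-clockwise, +Z is the build direction (the exported file may well use a different up axis), and a 45 degree overhang limit, which is a common slicer rule of thumb and not anything Imagine 3D specifies. The function names are hypothetical.

```python
import math


def load_triangles(obj_path):
    """Read vertices and faces from an OBJ file, fan-splitting polygons into triangles."""
    vertices, triangles = [], []
    with open(obj_path, encoding="utf-8", errors="replace") as handle:
        for line in handle:
            parts = line.split()
            if not parts:
                continue
            if parts[0] == "v":
                vertices.append(tuple(float(x) for x in parts[1:4]))
            elif parts[0] == "f":
                # Face tokens look like "v", "v/vt" or "v/vt/vn"; indices are 1-based,
                # and negative indices count back from the most recent vertex.
                idx = [int(tok.split("/")[0]) for tok in parts[1:]]
                idx = [i - 1 if i > 0 else len(vertices) + i for i in idx]
                for k in range(1, len(idx) - 1):
                    triangles.append((idx[0], idx[k], idx[k + 1]))
    return vertices, triangles


def overhang_fraction(vertices, triangles, max_angle_deg=45.0, up=(0.0, 0.0, 1.0)):
    """Fraction of surface area tilted more than max_angle_deg past vertical, facing down.

    Note: a face resting directly on the print bed also counts as an overhang here.
    """
    threshold = -math.sin(math.radians(max_angle_deg))
    total_area = 0.0
    overhang_area = 0.0
    for ia, ib, ic in triangles:
        a, b, c = vertices[ia], vertices[ib], vertices[ic]
        u = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
        v = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
        cross = (u[1] * v[2] - u[2] * v[1],
                 u[2] * v[0] - u[0] * v[2],
                 u[0] * v[1] - u[1] * v[0])
        length = math.sqrt(cross[0] ** 2 + cross[1] ** 2 + cross[2] ** 2)
        if length == 0.0:
            continue  # skip degenerate triangles
        area = 0.5 * length
        total_area += area
        # Cosine of the angle between the unit face normal and the up direction.
        cos_up = (cross[0] * up[0] + cross[1] * up[1] + cross[2] * up[2]) / length
        if cos_up < threshold:
            overhang_area += area
    return overhang_area / total_area if total_area else 0.0
```

A result near zero would suggest a model that prints with little support in that orientation, while the lumpy, organic shapes described above are likely to score much higher. Trying a few different `up` vectors is a cheap way to compare candidate print orientations.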

To me the true benefit of Imagine 3D is a live example of what our 3D future could be like. Instead of toiling for hours in a 3D CAD system to create simple objects, one could instead just ask for them.

Sure, Imagine 3D is pretty rough. But it didn’t exist AT ALL only a few months ago. Let’s see where this technology can take us in the next few years, as this is only the beginning.

Via Imagine 3D

I was previously authorised by Luma AI to confirm that these 3D data could be printed on a Mimaki full-colour 3D printer.

As you point out, the 3D data is not yet complete.

But I am also very excited about this technology!