We managed to do an initial test of Apple’s new Object Capture feature.

As we wrote last week, Apple announced a new software API that provides direct access to powerful 3D scanning features. The service uses photogrammetry, in which a series of images taken from different angles is reconstructed into a full 3D model.

At the time I wrote the story, all we knew was what Apple mentioned during their very brief keynote description of Object Capture. It sounded enticing, but how does it actually work? How can you use it?

Over the past week I’ve learned a lot more about Object Capture.

First, the API is part of Apple’s upcoming Mac OS Monterey release. This means that, as far as we can tell, the API exists only on the Mac itself and not on the iPhone.

The implication is that the iPhone is used to capture the required images, which must then be transferred to a suitably upgraded Mac for processing. The Mac must not only be running Mac OS Monterey, but also have the powerful M1 chip.

In retrospect, that’s not surprising. Photogrammetry is a very compute-intensive application, and I have spent many hours waiting for photogrammetry software to generate 3D models. It’s therefore unrealistic to expect a smartphone of any type to perform that processing.

In fact, my previous story suggested Apple might be using a cloud-based service for that processing, but it turns out the processing is done locally on the M1.

Having no Monterey-equipped M1 Mac on hand at the lab, I enlisted the assistance of someone who does: Fabbaloo reader Dan Blair of Bitspace Development, who just happened to be testing the Monterey beta software for his VR business.

I was able to capture images and send them to Dan, who ran them through a demonstration application using Object Capture from Apple. Here’s what we learned:

- Like any photogrammetry application, Object Capture needs a lot of quality images (well over a hundred) to produce a decent 3D model

- Object Capture runs insanely fast, taking only a couple of minutes to process my 112 images

- Images should be taken with the iPhone’s Portrait Mode, which includes depth information in each image

- Thin structures tend to be missed, but that is expected with most photogrammetry systems. It’s likely the depth camera doesn’t see them

- Object Capture properly fills in gaps in the generated 3D model, and the finished models are nearly printable

- Color texturing is provided, and the textures are of very good quality

- Exported data is in .OBJ format, which is easily converted into printable formats, such as .STL or .3MF
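To show how simple the OBJ-to-STL step can be, here is a minimal Python sketch of my own (not part of Object Capture) that reads the vertex and face records of an OBJ file and writes ASCII STL. It assumes convex polygon faces and ignores texture coordinates, normals and materials, since STL cannot store color anyway; the function names are hypothetical. In practice a slicer or a mesh tool such as MeshLab would do this conversion for you.

```python
import math


def parse_obj(text):
    """Return (vertices, faces) from OBJ text; faces are lists of 0-based vertex indices."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            face = []
            for token in parts[1:]:
                # A face token may be "v", "v/vt", "v/vt/vn" or "v//vn"; keep only v.
                idx = int(token.split("/")[0])
                # OBJ indices are 1-based; negative ones count back from the
                # most recently defined vertex.
                face.append(idx - 1 if idx > 0 else len(vertices) + idx)
            faces.append(face)
    return vertices, faces


def facet_normal(a, b, c):
    """Unit normal of triangle abc from the cross product (b - a) x (c - a)."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(x * x for x in n))
    return tuple(x / length for x in n) if length > 0 else (0.0, 0.0, 0.0)


def obj_to_ascii_stl(text, name="scan"):
    """Convert OBJ geometry to an ASCII STL string (color texture is dropped)."""
    vertices, faces = parse_obj(text)
    out = [f"solid {name}"]
    for face in faces:
        # STL stores only triangles, so split each (assumed convex) polygon into a fan.
        for k in range(1, len(face) - 1):
            a, b, c = vertices[face[0]], vertices[face[k]], vertices[face[k + 1]]
            n = facet_normal(a, b, c)
            out.append(f"  facet normal {n[0]:e} {n[1]:e} {n[2]:e}")
            out.append("    outer loop")
            for p in (a, b, c):
                out.append(f"      vertex {p[0]:e} {p[1]:e} {p[2]:e}")
            out.append("    endloop")
            out.append("  endfacet")
    out.append(f"endsolid {name}")
    return "\n".join(out) + "\n"
```

Usage would be to read the exported file with `open("figurine.obj").read()`, pass the text to `obj_to_ascii_stl`, and write the returned string to a `.stl` file; the file names here are placeholders.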

Regarding the API itself, Blair said:

“This is super accessible and easy for developers to use, but is for Mac only.”

How did our test proceed? Let’s look at the results. I chose to 3D scan a ceramic figurine, one that I had been using for photogrammetry experiments last year. This item is about 20cm tall.

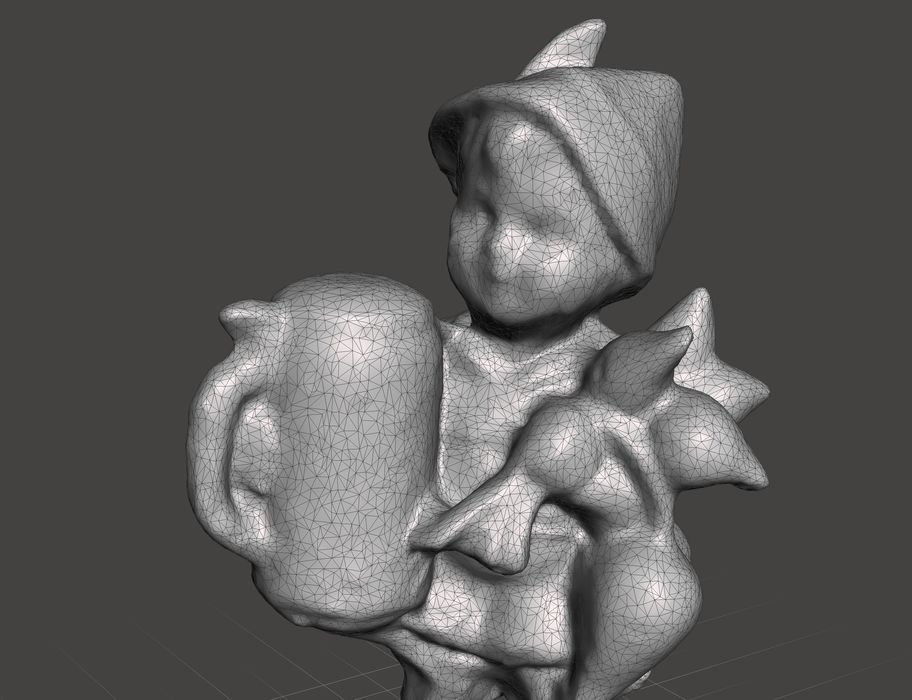

At top you can see the resulting 3D model produced by Apple’s Object Capture using 112 Portrait Mode images. I think it turned out exceptionally well. All holes are filled, there are no extraneous floaty bits and it’s generally a very clean scan. It’s almost as if someone had spent time manually cleaning it up for you.

A closer inspection reveals some geometry issues, however. Take a look under the jug: the 3D model has a flowing surface that doesn’t quite match the figurine. I suspect this is because few clear images covered that area, as it was difficult to orient the camera toward that spot. Apple’s Object Capture seems to have filled in the hole as best it could, and it didn’t do a bad job.

Having seen that fix, I suspect Object Capture does the same with other, smaller gaps in the data, and you can’t really tell where they were.

Here’s a view of the monochrome 3D model for comparison, and it’s quite good:

The 3D model generated for this item had about 33K faces, a good compromise between an unwieldy high-detail mesh and a coarse low-detail one.
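If you want to check the size of your own scan, counting faces in an OBJ file is straightforward, because each face is stored on a line beginning with `f`. The Python helpers below are a hypothetical sketch, not part of Apple’s API. They also report how many triangles those faces amount to, since a polygon with n corners splits into n - 2 triangles.

```python
from pathlib import Path


def count_obj_faces(lines):
    """Count OBJ face records and the triangles they become when fan-triangulated."""
    faces = triangles = 0
    for line in lines:
        parts = line.split()
        if parts and parts[0] == "f":
            faces += 1
            # parts[1:] are the corners; an n-corner polygon yields n - 2 triangles.
            triangles += max(len(parts) - 1 - 2, 0)
    return faces, triangles


def count_obj_file_faces(path):
    """Stream an OBJ file line by line so a large scan is never fully loaded."""
    with Path(path).open() as fh:
        return count_obj_faces(fh)
```

Calling `count_obj_file_faces("figurine.obj")` (a placeholder file name) would return a `(faces, triangles)` pair; for an all-triangle mesh the two numbers are equal.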

The application Blair used was Apple’s demonstration app included in their Monterey development kit. Its workflow does not necessarily represent how another developer might build a proper 3D scanning system with the API in the future.

One approach could be this: develop a specialized smartphone app that walks you through the capture process. It could tell you when to move the camera, keep you from forgetting to scan the top or underside, and organize the photos into a block for transfer.
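The “don’t forget the underside” guidance could be as simple as bucketing each shot by viewing direction. This Python sketch assumes, hypothetically, that the capture app logs each photo’s azimuth and elevation around the object; the 45-degree sectors, the elevation cut-offs and the three-shots-per-zone minimum are arbitrary illustrative values, not anything Apple specifies.

```python
def missing_views(shots, sector_deg=45, min_per_zone=3):
    """List the viewing zones that still need more photos.

    shots: iterable of (azimuth_deg, elevation_deg) camera directions around
    the object's center (hypothetical data a capture app would record).
    sector_deg should divide 360 evenly.
    """
    counts = {}
    for azimuth, elevation in shots:
        if elevation > 45:
            zone = "top"
        elif elevation < -15:
            zone = "underside"
        else:
            # Side shots are bucketed by their angle around the object.
            start = int(azimuth % 360 // sector_deg) * sector_deg
            zone = f"side {start}-{start + sector_deg}"
        counts[zone] = counts.get(zone, 0) + 1

    wanted = ["top", "underside"]
    wanted += [f"side {a}-{a + sector_deg}" for a in range(0, 360, sector_deg)]
    return [zone for zone in wanted if counts.get(zone, 0) < min_per_zone]
```

An app could call this after every photo and prompt the user toward whichever zones are still listed, only allowing the block to be sent once the list is empty.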

A second application could exist on Mac OS Monterey that would automatically receive the image block and begin processing. Perhaps it could offer some sliders to allow the operator to process the images slightly differently, or even export in other formats.
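On the Mac side, “automatically receive the image block” could be an inbox folder that is polled for completed uploads. The sketch below is hypothetical throughout: the inbox layout, the `READY` marker file and the `process` callback (where a real app would start its Object Capture job) are my assumptions, not part of Object Capture.

```python
import time
from pathlib import Path


def find_ready_blocks(inbox, seen):
    """Return sub-folders of inbox that are fully uploaded and not yet processed.

    Assumes a hypothetical convention: the phone app copies all images into a
    folder, then writes an empty "READY" file so half-copied blocks are skipped.
    """
    ready = []
    for block in sorted(Path(inbox).iterdir()):
        if block.is_dir() and (block / "READY").exists() and block.name not in seen:
            ready.append(block)
    return ready


def watch_inbox(inbox, process, poll_seconds=5.0, max_polls=None):
    """Poll inbox and hand each newly completed block to process(block_path)."""
    seen = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for block in find_ready_blocks(inbox, seen):
            seen.add(block.name)
            process(block)  # hypothetical hook: start the photogrammetry job here
        polls += 1
        if max_polls is None or polls < max_polls:
            time.sleep(poll_seconds)
```

Polling keeps the sketch short; a production Mac app would more likely react to file-system change notifications instead.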

Blair suggested it may even be possible to pipe the output directly to a 3D print slicer for immediate 3D printing. Another possibility is for Mac-based photogrammetry apps to switch their internal engine to use Apple’s Object Capture in future releases.

There’s much more to explore, and I’d like to do additional experiments. But my initial impression is that Object Capture is quite capable and will likely take over the photogrammetry world on the Mac platform.