Sketch2Pose provides an intriguing capability that might eventually lead to a new way of 3D modeling.

You probably haven’t heard of Sketch2Pose because it’s actually part of a research project taking place at the Université de Montréal.

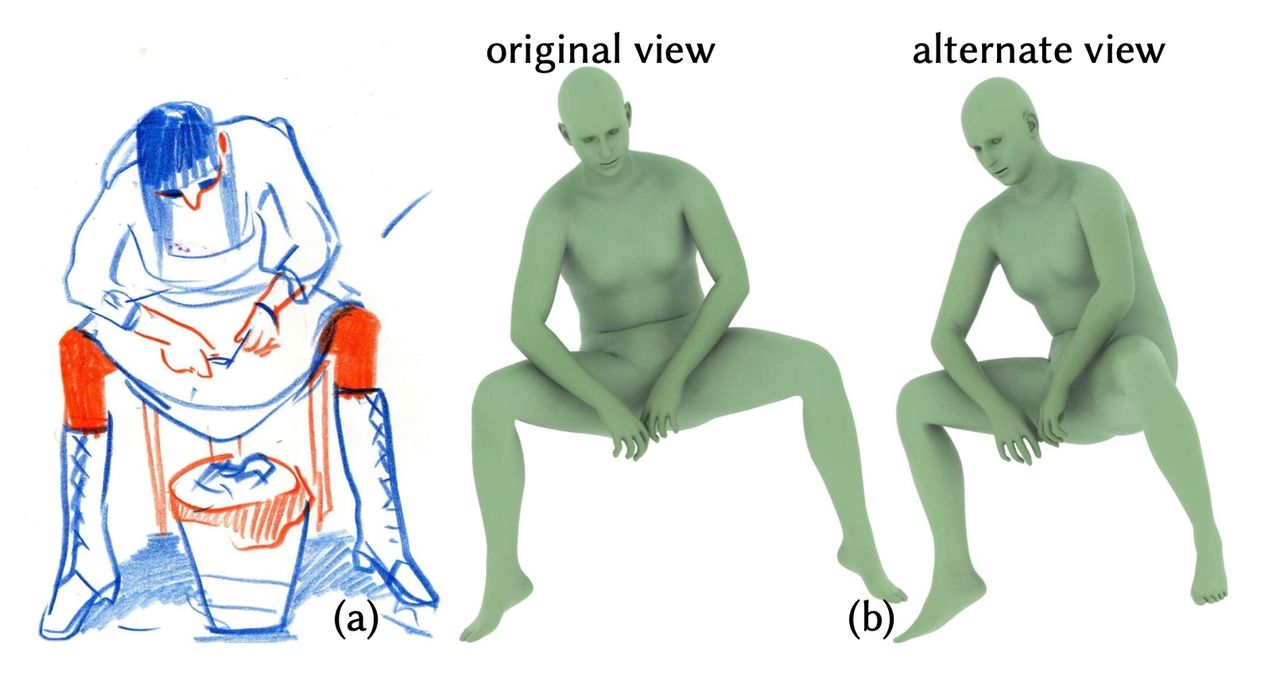

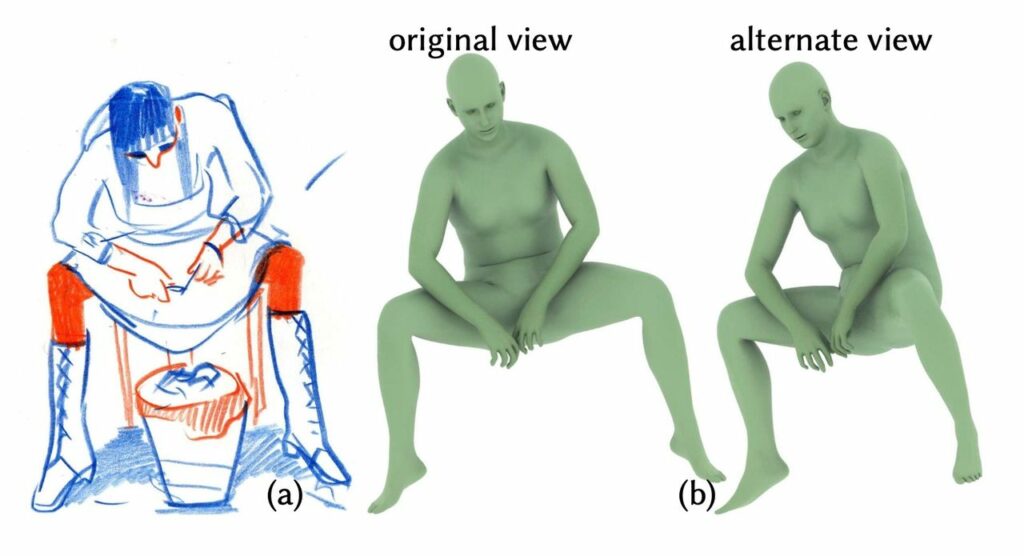

The tool is a kind of 2D to 3D converter. The idea is to have an artist sketch out a figure in a pose, and then Sketch2Pose uses its internal knowledge of anatomy to extrapolate the sketch into a posed 3D model. The sequence of images at top illustrates how this is supposed to work.

If this works consistently, it could dramatically reduce the effort required by 3D artists to convert storyboard sketches into 3D characters usable in games, films or other media.

This transformation seems complex, and I’m wondering how it’s actually done. The project explains:

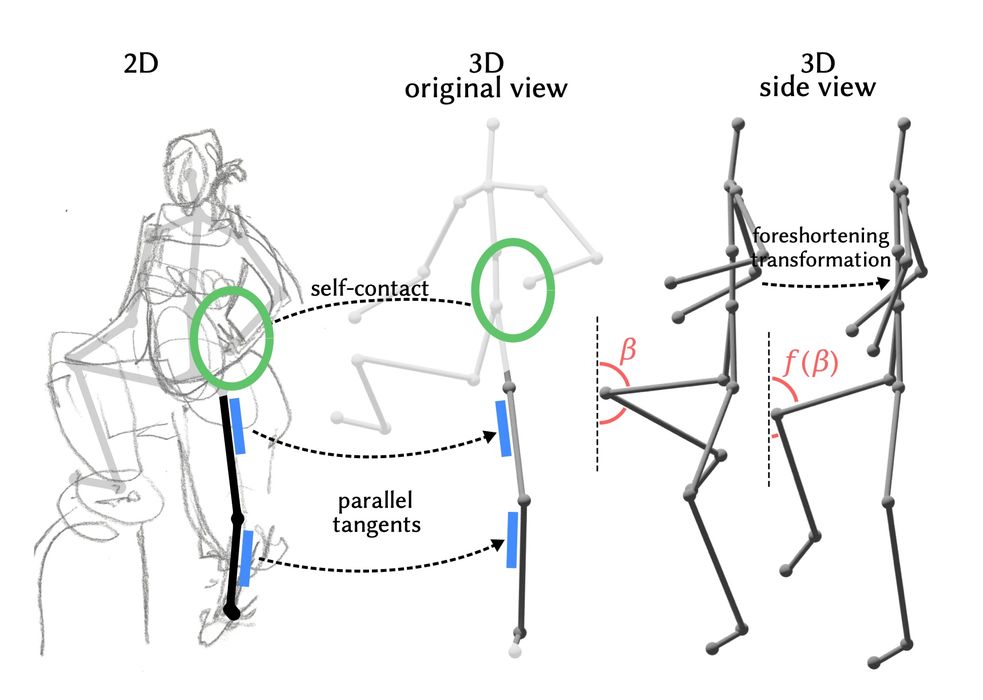

“Algorithmically interpreting bitmap sketches is challenging, as they contain significantly distorted proportions and foreshortening. We address this by predicting three key elements of a drawing, necessary to disambiguate the drawn poses: 2D bone tangents, self-contacts, and bone foreshortening. These elements are then leveraged in an optimization inferring the 3D character pose consistent with the artist’s intent. Our optimization balances cues derived from artistic literature and perception research to compensate for distorted character proportions.”

This is quite impressive: they are able to convert a rough sketch into something highly complex, consistent and usable.

In the world of 3D printing, Sketch2Pose isn’t directly useful, as it seems intended mostly for game builders. However, the way this is accomplished is rather interesting.

The researchers have defined a solution range that encompasses the possible poses of the human body. Then, the input sketch is mapped to a specific solution that hopefully matches the drawing’s intention. This only works because there is a fixed range of solutions.
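To make the idea of a fixed solution range concrete, here is a toy Python sketch. It is not the project's actual optimization; the joint names and angle limits are made-up illustrative values. It only shows how a rough estimate can be pulled back into a bounded set of valid answers.

```python
# Hypothetical joint limits in degrees. These are illustrative values only,
# not taken from Sketch2Pose.
JOINT_LIMITS = {
    "elbow": (0.0, 150.0),
    "knee": (0.0, 140.0),
}


def constrain_pose(estimated):
    """Clamp each estimated joint angle into its allowed range."""
    pose = {}
    for joint, angle in estimated.items():
        low, high = JOINT_LIMITS[joint]
        pose[joint] = min(max(angle, low), high)
    return pose


# A rough estimate read off a sketch may fall outside what a body can do.
noisy = {"elbow": 172.0, "knee": -8.0}
constrained = constrain_pose(noisy)
```

Because every output lands inside the predefined limits, even a sloppy input produces a plausible result, which is the property the approach seems to depend on.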

Now let’s consider a long-term problem in 3D printing: acquiring a 3D model for printing.

There are today only a few ways of obtaining such a 3D model:

Buying or downloading one that’s been designed by someone else

3D scanning an item and hoping the scan is printable (often it’s not)

Learning a CAD system and tediously building the 3D model from scratch or with a template

The latter two options are the best, yet are basically inaccessible for the vast majority of the population. This alone has presented a massive adoption barrier to 3D printing that has yet to be overcome.

What most people would need and could actually use is the ability to simply sketch out what they want and have a 3D model produced.

No such system exists.

But then, Sketch2Pose seems to do something very much like that. Could it, or an adaptation of it, be used to generate 3D printable models?

I think this is actually possible, if the solution range is limited, just as is done with Sketch2Pose. Imagine something called “Sketch2Bolt”, which would accept a sketch of a bolt and then attempt to design a 3D model of a corresponding bolt.

The sketcher could draw the shape of the bolt head, ratio of length, perhaps even the dimensions, and Sketch2Bolt could generate the bolt 3D model in the same way Sketch2Pose generates a human pose 3D model.
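As a rough illustration of what the back end of such a tool might do, here is a minimal Python sketch. Everything in it is hypothetical: `BoltSpec`, `sketch_to_bolt` and the idea of measuring a drawn diameter and length ratio are assumptions for the example, and the hard part (reading those measurements from a drawing) is skipped entirely. The list of diameters is a small subset of common metric sizes.

```python
from dataclasses import dataclass

# A small subset of common metric bolt diameters, in millimetres.
STANDARD_DIAMETERS_MM = (3.0, 4.0, 5.0, 6.0, 8.0, 10.0, 12.0)


@dataclass(frozen=True)
class BoltSpec:
    """Hypothetical parameters a generator would need to build the model."""
    head: str
    diameter_mm: float
    length_mm: float


def sketch_to_bolt(head, drawn_diameter_mm, length_ratio):
    """Map rough sketch measurements onto the nearest valid bolt."""
    # Snap the drawn diameter to the closest standard size.
    diameter = min(STANDARD_DIAMETERS_MM, key=lambda d: abs(d - drawn_diameter_mm))
    # Derive the length from the drawn length-to-diameter ratio, in whole mm.
    length = float(round(diameter * length_ratio))
    return BoltSpec(head, diameter, length)


# A sketch measured at roughly 5.6 mm wide and 4.2 times as long as it is wide.
spec = sketch_to_bolt("hex", 5.6, 4.2)
```

Here the imprecise 5.6 mm input snaps to a 6 mm bolt. The resulting parameters could then be handed to any parametric CAD template to produce the printable model.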

Sketch2Bolt would make bolt 3D models, but not nuts. For that you’d need “Sketch2Nut”.

Then you can imagine a series of different “Sketch2” systems, each focusing on a particular type of mechanical part. If gathered together on a website, the user would simply pick the kind of object required and then provide a sketch. Custom 3D models could be quickly produced on demand for these parts, and then printed.

If such a system existed, it could revolutionize consumer use of 3D printers. Today there are plenty of consumers using 3D printers, but most of them are simply producing more plastic dragons. What if they were suddenly energized to “create” their own parts from a simple sketch whenever they needed them?

I think if that happened, there would be many, many more consumer 3D printers sold.