With the release of new hardware from Apple, it seems millions will have a LIDAR 3D scanner in their pockets or purses, but can it really be used for 3D scanning?

The LIDAR hardware first appeared in an iPad release earlier this year, and had many wondering what Apple was doing. However, as time has passed it’s become quite clear what’s going on.

LIDAR 3D Scanning

LIDAR, if you don’t know, is essentially a depth camera: it fires out an array of light beams and measures how long each beam takes to reflect back to the sensor. Because each beam travels to the surface and back, multiplying that round-trip time by the speed of light and halving the result gives the distance to that point, and together those distances form a 3D depth map of the current scene.
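The time-of-flight arithmetic is simple enough to sketch. The Python below is a minimal, idealized illustration, not Apple’s actual processing (which isn’t public): it assumes each beam reports a clean round-trip time in seconds, and the function names and sample times are hypothetical. Real sensors also have to deal with timing noise, calibration and missed returns, none of which is modeled here.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_distance(round_trip_seconds: float) -> float:
    """Convert a round-trip time of flight into a one-way distance in metres.

    The pulse travels to the surface and back, so the one-way distance
    is half of (speed of light x elapsed time).
    """
    if round_trip_seconds < 0:
        raise ValueError("time of flight cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def depth_map(round_trip_times):
    """Turn a 2D grid of per-beam round-trip times into a 2D grid of distances."""
    return [[tof_to_distance(t) for t in row] for row in round_trip_times]


if __name__ == "__main__":
    # Hypothetical 2x2 grid of round-trip times (seconds) for four beams.
    times = [[6.67e-9, 13.34e-9],
             [20.0e-9, 33.36e-9]]
    for row in depth_map(times):
        print(["%.2f m" % d for d in row])
```

For scale, a round trip of roughly 6.67 nanoseconds corresponds to about one metre, which is why these sensors need extremely precise timing.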

This is useful to Apple in several ways, the most important likely being camera focusing. Instead of relying on a single point for distance focusing, the camera has a wealth of depth information for different portions of the scene. This allows the embedded AI software to do magical things with focusing, as well as other tricks like blurred backgrounds for portraits.

Another feature enabled by LIDAR on mobile devices is much more accurate augmented reality (AR) apps. One, for example, lets you place furniture from the IKEA catalog in the camera view, so you can see what that table would look like in your living room. Similarly, you can measure the size of objects.

But there’s one more thing that can be done with this LIDAR hardware: 3D scanning. The concept here is that while each LIDAR capture produces only a single depth map, you can move the device around to capture many depth maps, which software can then stitch together into a 3D model.
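Real scanning apps use their own, more sophisticated pipelines, so what follows is only a conceptual sketch of the stitching idea. It assumes a simple pinhole camera model with hypothetical intrinsics (fx, fy, cx, cy) and, crucially, assumes each capture’s camera pose is already known. In practice, estimating those poses (and then turning the merged points into a mesh) is the hard part, and neither is shown here.

```python
import math


def back_project(depth, fx, fy, cx, cy):
    """Lift a depth map (rows of metres) into 3D points in the camera's frame.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with a depth of 0 (no return) are skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def yaw_pose(angle_rad, tx, ty, tz):
    """Hypothetical camera pose: rotation about the vertical (Y) axis plus a translation."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    rotation = ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))
    return rotation, (tx, ty, tz)


def to_world(points, pose):
    """Move camera-frame points into the shared world frame: p_world = R * p_camera + t."""
    r, t = pose
    return [
        tuple(r[i][0] * x + r[i][1] * y + r[i][2] * z + t[i] for i in range(3))
        for x, y, z in points
    ]


def fuse(frames, fx, fy, cx, cy):
    """Merge several (depth_map, pose) captures into one world-frame point cloud."""
    cloud = []
    for depth, pose in frames:
        cloud.extend(to_world(back_project(depth, fx, fy, cx, cy), pose))
    return cloud


if __name__ == "__main__":
    # Two tiny hypothetical 2x2 depth maps; a 0 marks a beam with no return.
    frame_a = ([[1.0, 1.0], [1.0, 0.0]], yaw_pose(0.0, 0.0, 0.0, 0.0))
    frame_b = ([[1.2, 1.2], [1.2, 1.2]], yaw_pose(math.radians(30), 0.5, 0.0, 0.0))
    cloud = fuse([frame_a, frame_b], fx=2.0, fy=2.0, cx=0.5, cy=0.5)
    print(len(cloud), "points")  # 3 from frame_a + 4 from frame_b
```

Each capture is lifted into 3D in its own camera frame and then moved into a shared world frame, so overlapping captures contribute points to the same cloud.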

If that’s the case, then theoretically anyone with a LIDAR-equipped Apple device could capture 3D scans of anything, and that’s a big deal.

Or is it? Does LIDAR really enable easy 3D scanning, or is it as difficult as we 3D scanning veterans know it to be?

Real-Life LIDAR Scanning

Keven Peters decided to find out, and produced a video of his experiences in 3D scanning, well, anything he found around him. He used his new iPhone 12 Pro, which is one of the devices equipped with LIDAR, to perform the scanning, and chose the free “3D Scanner App” to do so.

Peters found that he was unable to properly scan smaller items, likely because of the low density of the LIDAR dot array. While many are likely familiar with the depth camera that performs face recognition, it turns out the LIDAR sensor has a far less dense beam array. These images from iFixit show the difference.

Here’s the view of the infrared “dot array” from the iPhone’s front camera that’s used for facial recognition:

And here’s the view of the LIDAR dot array. Note the difference:

You can now imagine the challenge of capturing detail with that kind of loose “net” gathering information.
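A bit of geometry shows why a sparse dot grid struggles with small objects. The sketch below assumes the beams are spread evenly across the field of view and uses made-up field-of-view and dot-count figures, not Apple’s specifications. The “feature must span two gaps” test is a hypothetical rule of thumb, not a published limit.

```python
import math


def dot_spacing(distance_m, fov_deg, dots_across):
    """Approximate gap (metres) between neighbouring dots on a flat surface facing the sensor.

    Assumes beams are spread evenly across the field of view, so the footprint
    width 2 * d * tan(fov / 2) is divided into (dots_across - 1) gaps.
    """
    if dots_across < 2:
        raise ValueError("need at least two dots to have a gap")
    width = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return width / (dots_across - 1)


def can_resolve(feature_size_m, distance_m, fov_deg, dots_across):
    """Hypothetical rule of thumb: a feature must span at least two dot gaps to be captured."""
    return feature_size_m >= 2.0 * dot_spacing(distance_m, fov_deg, dots_across)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only; not Apple's specifications.
    fov = 60.0
    for name, dots in (("dense grid", 100), ("sparse grid", 24)):
        for d in (0.5, 3.0):
            gap_cm = 100 * dot_spacing(d, fov, dots)
            print(f"{name}: {gap_cm:.1f} cm between dots at {d} m")
    # A 3 cm feature seen from 0.5 m by the sparse grid:
    print(can_resolve(0.03, 0.5, fov, 24))  # False with these assumed numbers
```

Because the gap grows linearly with distance, moving closer helps, but with a coarse grid, small features can still slip between the dots entirely.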

Nevertheless, Peters persisted in his experiments, focusing instead on larger objects like cars and buildings. Unfortunately, he ran into the reflectivity problem that plagues professional 3D scanning: on shiny surfaces, the light beams bounce off at angles that carry them away from the sensor instead of back to it, leaving holes in the scan. In the video, Peters wanders the streets looking for cars with dull paint jobs to use as scanning subjects.
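To see why shiny surfaces cause trouble, consider the idealized mirror case. The sketch below applies the law of reflection, r = d - 2(d·n)n, to a single beam and reports how far the bounced beam misses the path back to the sensor. It models a perfectly glossy surface only; real car paint mixes mirror-like and diffuse reflection, and the acceptance-cone width is a hypothetical parameter.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def reflect(d, n):
    """Mirror-reflect direction d about unit surface normal n: r = d - 2 (d . n) n."""
    dot = sum(di * ni for di, ni in zip(d, n))
    return tuple(di - 2.0 * dot * ni for di, ni in zip(d, n))


def angle_off_sensor_deg(beam_dir, surface_normal):
    """Angle (degrees) between a mirror-reflected beam and the path straight back to the sensor."""
    d = normalize(beam_dir)
    n = normalize(surface_normal)
    r = reflect(d, n)
    back = tuple(-c for c in d)
    # Clamp to guard against tiny floating-point overshoots before acos.
    cos_a = max(-1.0, min(1.0, sum(a * b for a, b in zip(r, back))))
    return math.degrees(math.acos(cos_a))


def returns_to_sensor(beam_dir, surface_normal, acceptance_deg=2.0):
    """True if a purely mirror-like bounce lands within a hypothetical acceptance cone."""
    return angle_off_sensor_deg(beam_dir, surface_normal) <= acceptance_deg


if __name__ == "__main__":
    beam = (0.0, 0.0, 1.0)  # sensor looks straight down +Z
    for tilt in (0, 5, 20, 45):
        t = math.radians(tilt)
        normal = (math.sin(t), 0.0, -math.cos(t))  # surface tilted by `tilt` degrees
        print(tilt, round(angle_off_sensor_deg(beam, normal), 1), returns_to_sensor(beam, normal))
```

For a mirror-like surface the miss angle is twice the tilt, so even a modest tilt sends the beam well away from the sensor. A dull, matte finish scatters light in many directions, so some of it makes it back, which is why dull paint jobs make better subjects.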

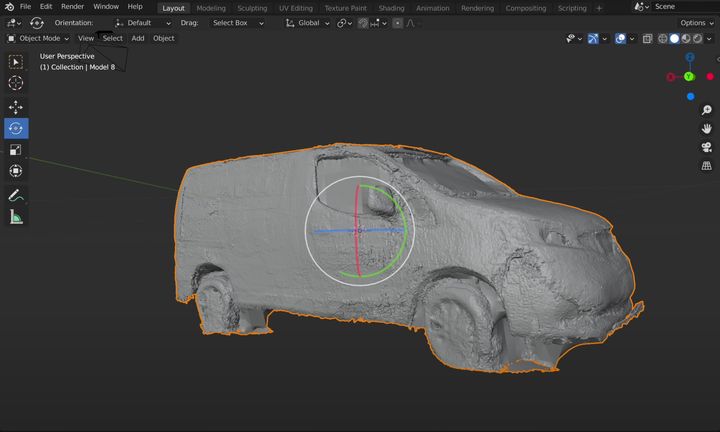

After capturing a few reasonable scans, Peters used Blender to repair them well enough for 3D printing, and was able to print replicas of some of them on his desktop 3D printer.

However, it was a lot more work than he had imagined, and that’s almost always the case with 3D scanning. Because of this difficulty, it’s unlikely that we’ll see an avalanche of printable 3D scans from the millions of LIDAR-equipped mobile phones.

Be sure to watch the video, as it takes you through the entire LIDAR 3D scanning experience.

One thought to close: there’s now a very good reason to NOT wash your car.

Via YouTube