Apple surprised everyone with the announcement of a new feature that could allow any iOS device to capture 3D models easily.

Handheld 3D Scanning

This has long been a dream for me: having a competent 3D scanner in my pocket at all times. I could call up a scanning app on a whim and quickly capture a 3D model of any subject.

In a way, that’s partly true already, as there are third-party apps that can perform 3D scanning on iPhones. Capture, Scandy, 3D Scanner App and a few others come to mind.

However, most of these apps come with drawbacks. Some don’t use the recent LiDAR sensor to position 3D models more accurately in space, while others require LiDAR, which rules out older devices. And by older, I mean last year’s models and even some current ones.

Some of these apps focus solely on augmented reality, and you can’t easily extract a 3D-printable model from them. Others charge you for each download. It’s an inconsistent world, albeit one that does offer some promise for the future.

Apple Object Capture

That future may have arrived this week, as Apple slipped a very intriguing announcement into its WWDC (Worldwide Developers Conference) keynote. This event is where Apple typically announces software features for its legions of app developers.

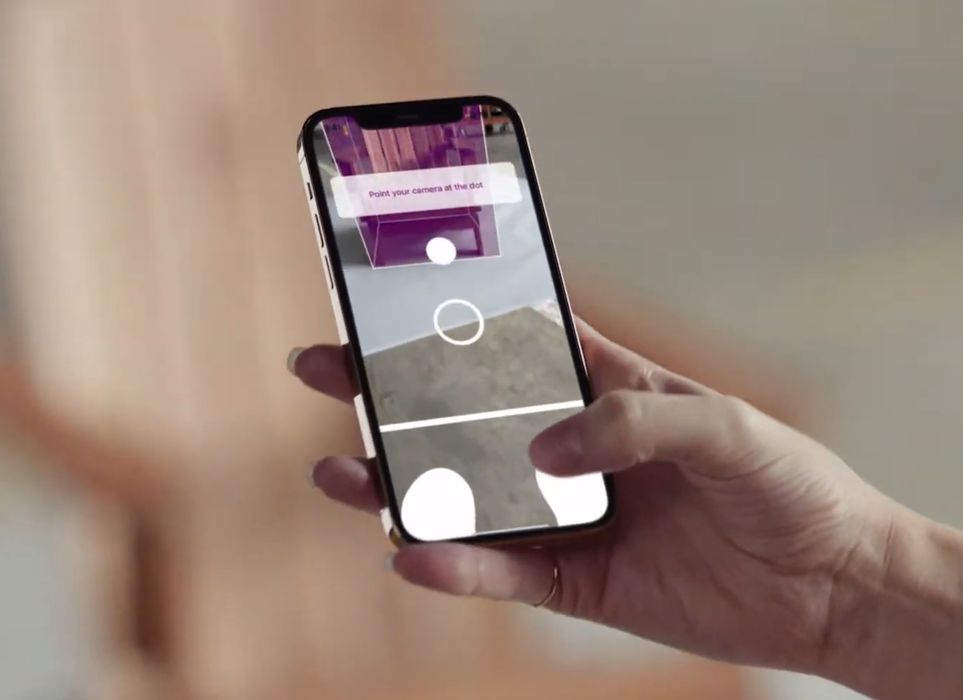

The feature is called “Object Capture”, and it is intended to let users capture 3D models of static subjects. It’s part of Apple’s RealityKit 2 API set.

We don’t know much about the feature yet, but Apple explained in its keynote that Object Capture uses photogrammetry: the process of interpreting a series of images of a subject taken from different angles and transforming them into a complete, textured 3D model.
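For the curious, here is a minimal sketch of the geometric idea at the heart of photogrammetry, and it is emphatically not how Apple implements Object Capture, whose internals are not public. If two photos show the same point from known camera positions, each photo defines a ray from its camera toward that point, and the point sits where the rays meet. The sketch uses the simple two-view "midpoint" triangulation method. Real photogrammetry software must first estimate the camera positions and match features across many images; here the camera centres and ray directions are simply assumed to be known, and the helper name `triangulate_midpoint` is hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Hypothetical illustration only, not Apple's Object Capture code.

    p1, p2: camera centres (assumed known); d1, d2: viewing-ray directions
    toward the same scene point. Returns the midpoint of the shortest
    segment joining the two rays, which equals their intersection when
    the rays actually meet.
    """
    w0 = tuple(x - y for x, y in zip(p1, p2))
    a = dot(d1, d1)
    b = dot(d1, d2)
    c = dot(d2, d2)
    d = dot(d1, w0)
    e = dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    q1 = tuple(p + s * v for p, v in zip(p1, d1))  # closest point on ray 1
    q2 = tuple(p + t * v for p, v in zip(p2, d2))  # closest point on ray 2
    return tuple((x + y) / 2 for x, y in zip(q1, q2))


# Two hypothetical cameras 4 units apart, both sighting the point (1, 2, 5).
point = triangulate_midpoint((0, 0, 0), (1, 2, 5), (4, 0, 0), (-3, 2, 5))
print(point)  # (1.0, 2.0, 5.0)
```

Taking the midpoint rather than demanding an exact intersection matters because, with real photos, noise means the two rays almost never meet exactly. A full photogrammetry pipeline repeats this kind of estimate for thousands or millions of matched points across many images, which is one reason the processing is so heavy.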

But let me be perfectly clear: Object Capture is NOT an app. It is an API (application programming interface): an interface to the operating system that an app can call to request specific services. In this case, the Object Capture API triggers photogrammetry processing.

This means app developers can now easily create 3D scanning apps by simply handing a set of images to the Object Capture API. Previously, scanning app developers had to do all the heavy lifting themselves, which is one reason the existing apps differ so much.

Now, with a centralized and standardized photogrammetry API, we can expect more consistently performing 3D scanning apps to appear quickly, and existing apps to adapt to the new capabilities.

Object Capture Speed

In its announcement, Apple explained that the user would capture a series of images, and the 3D model would be created “within minutes”. It’s not clear whether this processing takes place on the device itself (unlikely) or in the cloud, where Apple can muster vast processing resources.

Photogrammetry is not a lightweight task; it requires considerable processing power. Typical photogrammetry software relies on powerful graphics cards to iterate through the millions of points derived from the scan data. This is why I suspect Object Capture is a bridge into Apple’s cloud-based servers.

Apple showed two applications of Object Capture. One involved Maxon’s Cinema 4D modeling tool, where images were apparently transformed into a 3D model that could then be imported into a design.

The other involved a shopping system in which operators could quickly scan products and turn them into AR-capable 3D models for display on customer sites. The speed of the API lets the user verify a correct scan before even leaving the subject, something not usually possible with today’s photogrammetry apps.

Neither of these apps is the fabled handheld 3D scanner I’m looking for, but they show that the API works and could be used by a developer to create a true, powerful handheld 3D scanning app. Perhaps even now, a developer or two is building new and very useful scanning apps.

Now the waiting begins.

Via Apple