Somehow I hadn’t seen the FDM Assessment Protocol for quite a while, and maybe you haven’t either.

The FDM Assessment Protocol is intended to be a universal testing procedure for FFF 3D printers.

Why do we need a universal testing procedure? The demand for one arose because of an avalanche of seemingly identical low-cost FFF 3D printers on the market a few years ago, and there were no truly sound methods of comparing them.

Many new machines had been appearing on Kickstarter, sometimes weekly, and there were few ways to gauge the quality of this equipment, let alone the companies behind it.

Sure, there are useful benchmarks, such as the #3DBenchy, which is so often one of the first 3D prints to be done on a new machine. The “Benchy” is an object that incorporates a number of geometric elements that allow for testing.

However, it’s not really suitable for comparative testing, even while it is useful for an individual trying to dial in a new machine or material. There are similar benchmark objects that can be used to help identify the optimum parameters for a given setup.

But these are for dialing in, not for comparing results. How could you properly compare two Benchies from different printers? It would really be quite a subjective process.

The FDM Assessment Protocol, begun by Andreas Bastian in 2018, attempts to be far more objective. It’s a combination of material, object and process.

The overall process is to 3D print a specific test object with a known material, ESUN PLA+ Cool White, with default settings for the machine. Then a detailed process is used to measure and score the results of the print on fixed scales. In the end a single score is totaled for the print, representing the quality of the test for that machine.

The FDM Assessment Protocol measures these factors:

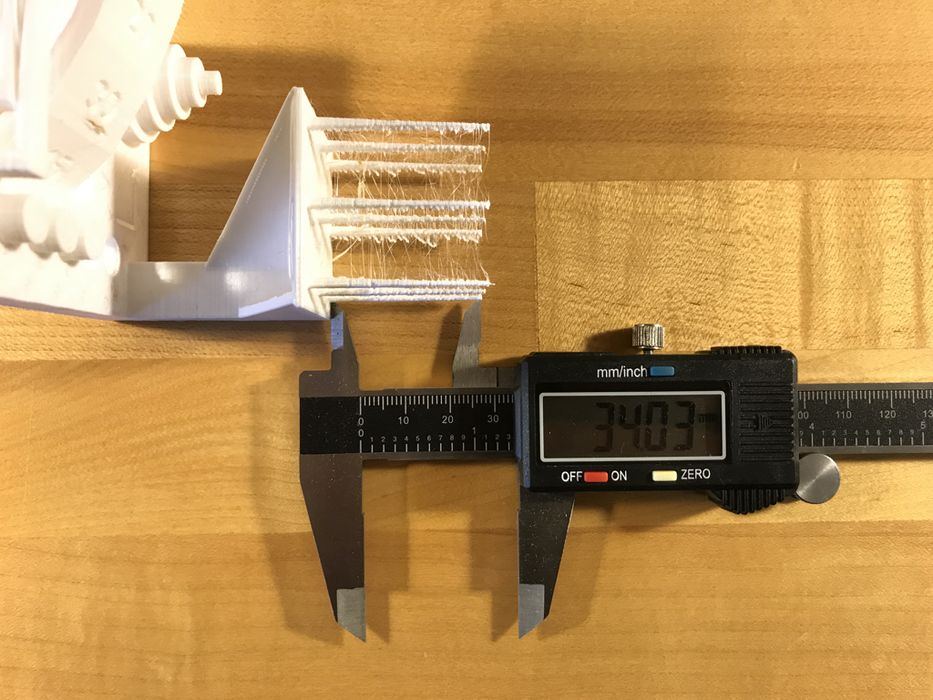

- Dimensional accuracy

- Negative feature resolution

- Positive feature resolution/fine flow control

- Basic overhang capabilities

- Basic bridging capabilities

- XY ringing

- Z-axis alignment

But it does NOT measure these factors:

- Warp/adhesion

- Overhang capabilities over a range of features (concave, convex, etc)

- Slicer handling of fine XY plane features (positive and negative space)

- Complex bridging situations

- Surface finish over a range of features

- Extrusion temperature optimization

- Support structure/geometry interaction

As you can imagine, testing and measuring the factors above could be extremely challenging given the variety of hardware and software involved. However, the list of factors that are actually measured is still quite useful and really can generate a comparative number for a machine.
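To make the "single comparative number" idea concrete, here is a minimal sketch of how per-category scores might be totaled. It is not the protocol's official scoring code: the category keys, the `total_score` function, and the 0 to 5 scale per category are all assumptions made for illustration, and the real protocol defines its own scales.

```python
# Hypothetical category keys, named after the measured factors listed earlier.
CATEGORIES = [
    "dimensional_accuracy",
    "negative_feature_resolution",
    "positive_feature_resolution",
    "overhangs",
    "bridging",
    "xy_ringing",
    "z_axis_alignment",
]


def total_score(scores: dict[str, float], max_per_category: float = 5.0) -> float:
    """Sum per-category scores into one number for a single test print.

    The default max_per_category is a placeholder assumption, not the
    protocol's actual scale.
    """
    # Every measured factor must have a score, otherwise totals are not comparable.
    missing = [c for c in CATEGORIES if c not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")

    # Reject values outside the assumed fixed scale.
    for category in CATEGORIES:
        value = scores[category]
        if not 0 <= value <= max_per_category:
            raise ValueError(f"{category} score {value} is out of range")

    return sum(scores[c] for c in CATEGORIES)


if __name__ == "__main__":
    # Made-up example values, not results from any real printer.
    example = {c: 4 for c in CATEGORIES}
    example["bridging"] = 2
    print(total_score(example))
```

Because every print is scored against the same fixed list, two totals are only comparable when both cover all of the categories, which is why the sketch refuses incomplete score sheets.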

Even so, you probably haven’t seen a score from this system recently. I happened to be reminded of the system because it was used during the launch of Anker’s M5 3D printer last week, where the new machine apparently received a perfect score. I do wonder, however, if Anker actually used the default settings for their testing, or whether they performed repeated runs with more optimal settings for the test object?

Why don’t we see scores for many machines? Wouldn’t it be useful to have them for every device?

It would certainly be useful, but the process of generating them is tedious, and perhaps many don’t want to make the effort. In particular, the management of a company whose machine scores poorly is unlikely to encourage publishing the results.

There’s another concern: even if a machine scored poorly, that could be due to the default settings. Every machine can certainly be tweaked with different print parameters to produce different quality output. So what then does a score based on the defaults really mean?

Via GitHub