In a world with many 3D printers to choose from and no easy way to compare them, what should you do? They use different materials in different ways, are priced differently and produce objects of differing quality.

This dilemma has been taken on by researcher Shane Ryan, who proposes what he calls “The Rho Test”. It’s a mathematical formula that attempts to combine these critical evaluation factors:

- Speed

- Quality

- Cost

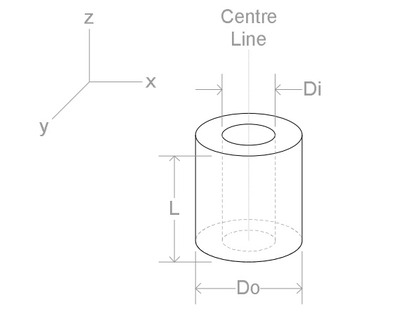

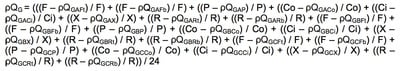

The test involves printing an official test object (above) in several ways while timing and costing the operation. The method also includes detailed instructions on how to measure the quality of the object. All of this gathered information is combined in a formula (a portion of which is shown below) to result in a set of three numbers representing the factors above. Theoretically these values can be directly compared between 3D printers.

While these factors could be useful for comparison, they don’t account for other considerations, such as potential build volume, material characteristics or machine reliability. There are other, even less well-defined factors that could be important, such as machine and material availability, support programs and community strength.

Do you think the Rho Test could work?

Via Sikal (PDF)

The effort, thought, and discussion that went into that document are far more valuable than the formula that emerged. Indeed, I contend that the Rho Test V1 actually has negative value, because it purports to give a metric that can be compared across fundamentally different technologies, with the authors giving SLA and FDM as examples. Employing the Rho metric to compare different build technologies would be far more misleading than useful.

1. When dealing with fundamentally different technologies, the attributes of the different build materials would trump any differences measured by the Rho test. A finished part is made entirely of whatever build material that specific process requires, and although there might be a selection of materials, there's typically no overlap: FDM exclusively uses thermoplastics; it can't use thermosetting resins. The authors casually compare FDM and SLA materials without acknowledging that SLA exclusively requires curable thermoset resins; SLA can't use thermoplastics. Some materials in one category might simulate the properties of the other category, but they aren't really comparable or interchangeable. For instance, Accura 25 SLA resin is promoted to "simulate low-end ABS" but it's not compatible with ABS finishing techniques and it can't be welded or chemically bonded to ABS. These are overriding traits, but the Rho score wouldn't account for them.

2. Conversely, Rho's measured attributes (Speed, Quality, Cost) are affected by factors that are totally incomparable between different technologies. There's only one Rho test object, so it doesn't capture any factors that might reflect or predict those differences between build technologies. For example, FDM is slower to build a solid object than a hollow one, whereas LOM (the technology my machine uses) builds solid objects much faster than hollow ones, and DLP-based technologies build solid objects and hollow objects at exactly the same rate. Likewise, the Rho test object has only purely vertical and purely horizontal walls, without any intermediate slopes, which would give an artificially worst-case ρQF for FDM and an artificially best-case ρQF for LOM, yielding Rho Quality scores that don't reflect real-world geometry and are inherently misleading when compared across different technologies.

At best the Rho test might help make comparisons within a single category. That's an ambitious enough goal, and within it the Rho test has potential value. But the authors are unnecessarily overshooting the mark when they suggest using it to compare inherently different build technologies, like SLA and FDM. I rather suspect the reference to FDM vs SLA materials was a thoughtless addition to the paper, perhaps an impulsive attempt to broaden its scope, which inadvertently weakens its message.