The process seems straightforward:

- Design a model with 3D modeling software

- Tweak the 3D model for printing suitability

- Send the 3D model to a 3D printer

- Play cards while the printer buzzes

- Enjoy your printed model

The fundamental element of this sequence is the 3D model itself, the digital artifact that represents the desired object. We spend our time creating models, modifying them and sharing them.

But is there another way?

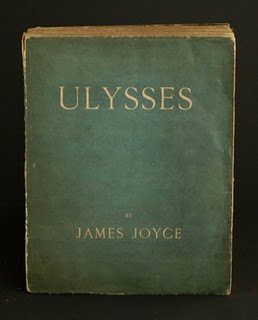

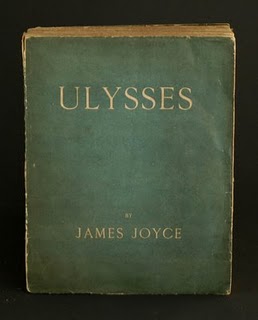

BLDG BLOG speculates about another approach, one involving the interpretation of a narrative. They ask us to consider the classic novel Ulysses by James Joyce, in which the city of Dublin is described in intricate detail. They then ask the questions:

> After all, how do you map the city down to its every last conceivable detail? And what if cartography is not the most appropriate tool to use?
>
> What if narrative – endlessly diverting narrative, latching onto distractions in every passing window and side-street, with no possible conversation or observation omitted – is the best way to diagram the urban world?

And then:

> I’d actually suggest that the narrative position just outlined actually describes not a person at all but a surveillance camera – that is, the Ulysses of the 21st century would actually be produced via CCTV: it would be Total Information Awareness in narrative form.

And:

> What if Ulysses had been written before the construction of Dublin? That is, what if Dublin did not, in fact, precede and inspire Joyce’s novel, but the city had, itself, actually been derived from Joyce’s book?

Finally:

> If you fed Ulysses into a milling machine – that is, if you input not a CAD file but a massive Microsoft Word document containing the complete text of Ulysses – what might be the spatial result? Would the streets and pubs and bedrooms and stairwells of Dublin be milled from a single block of wood?

What if you fed Ulysses through a 3D printer?

Whew! That’s slightly mind-blowing. Impractical, yes. Feasible? Not today. But could it ever be possible? Is it conceivable that a sufficiently advanced software system could interpret a narrative and fill in the gaps using conventional knowledge to arrive at a useful 3D model?
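To make the idea a little more concrete, here is a deliberately tiny sketch of what "text in, model out" could look like at toy scale. It is nowhere near interpreting a novel: it only picks out phrases such as "30 mm wide" from a sentence, falls back on assumed default sizes when the text is silent, and emits a single OpenSCAD `cube` statement. The function name `text_to_openscad`, the `DEFAULTS_MM` table and the three dimension words are all our own assumptions for illustration, not part of any existing tool.

```python
import re

# Hypothetical stand-in for "conventional knowledge":
# sizes (in mm) assumed whenever the description does not mention them.
DEFAULTS_MM = {"wide": 20.0, "deep": 20.0, "tall": 20.0}


def text_to_openscad(description: str) -> str:
    """Turn phrases like '30 mm wide' into one OpenSCAD cube statement."""
    dims = dict(DEFAULTS_MM)
    # A number, an optional 'mm', then one of the known dimension words.
    pattern = r"(\d+(?:\.\d+)?)\s*(?:mm)?\s*(wide|deep|tall)"
    for value, word in re.findall(pattern, description.lower()):
        dims[word] = float(value)
    # OpenSCAD's cube() takes [x, y, z]; we map wide/deep/tall onto those axes.
    return "cube([{wide:g}, {deep:g}, {tall:g}]);".format(**dims)


print(text_to_openscad("A small box, 30 mm wide and 12.5 mm tall."))
```

The depth is never mentioned in the example sentence, so the sketch quietly substitutes its default. That substitution is the whole "fill in the gaps" step here, which gives a sense of how much more a real system would need to know.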

We’ve written in the past about the need for vast repositories of models to help everyday folks get past the difficult design phase of the object creation sequence. But this goes well beyond that: here an intelligent agent would interpret our desires and draw on repositories of designs itself to produce the model.

Definitely not today, or tomorrow. But food for thought.

Via BLDG BLOG

I only read BLDGBLOG for the pictures and not the articles.

There's a line which separates interesting-ideas from intellectual-masturbation, and BLDGBLOG crosses that line frequently.

-S

Sure, it's possible. All you need is strong general AI.

Stupid. This kind of shit is exactly why I don't like BLDG blog. I just subscribed to fabbaloo, and I'm starting to regret it.