A new approach to point cloud processing could dramatically enhance the accuracy of complex 3D scans.

3D scanning equipment has never been more powerful and accessible, but there are limits to what can be achieved. When scanning highly complex objects, some of the details can get lost as they aren’t properly resolved during scan processing.

Researchers have developed two new algorithms that should greatly assist the interpretation of 3D scan data.

Let’s review what’s going on here first. All 3D scans must traverse several stages:

- Scan data is collected from a subject using a 3D scanner. The scanner can use a variety of techniques, ranging from photogrammetry to lasers to structured light

- The data is converted into a point cloud, which is a vast set of points in 3D space

- The point cloud is analyzed to identify surfaces, curves and edges using sophisticated algorithms

- The identified surfaces are then joined into (hopefully) a solid 3D model

- The model can then be exported in a mesh format such as STL or 3MF for 3D printing or other activities

As you can see, the key step in the process isn’t actually the scanning; it’s the analysis of the point cloud. If that doesn’t work well, then the 3D model won’t be as accurate as it could be.

The researchers propose two additional algorithms for this step: dual 3D edge extraction and opacity–color gradation.

Edges are typically quite challenging to identify in a point cloud, particularly if they are sharp or follow a curve. The new algorithm is better able to handle these situations. The researchers explain:

“Dual 3D edge extraction incorporates not only highly curved sharp edges but also less curved soft edges into the edge-highlighting visualization. Real-world objects, as captured through scanning, usually have numerous soft edges in addition to sharp edges as part of their 3D structures. Therefore, to sufficiently represent the structural characteristics of scanned objects, visualization should encompass both types of 3D edges: sharp and soft edges.”
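To make the sharp-versus-soft idea a little more concrete, here is a minimal sketch of how a point's neighborhood could be scored and sorted into the two edge types. It is not the researchers' implementation: it assumes a common eigenvalue-based feature known as surface variation (the smallest eigenvalue of the neighborhood's covariance matrix divided by the sum of all three), and the `soft_min` and `sharp_min` thresholds are hypothetical values picked purely for illustration.

```python
import math

def covariance(points):
    """3x3 covariance matrix of a list of (x, y, z) tuples."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[i] - mean[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / n
    return cov

def smallest_eigenvalue(a):
    """Smallest eigenvalue of a symmetric 3x3 matrix (closed-form trigonometric method)."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0.0:  # already diagonal
        return min(a[0][0], a[1][1], a[2][2])
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p2 = sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1
    p = math.sqrt(p2 / 6.0)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    r = max(-1.0, min(1.0, det_b / 2.0))  # clamp against rounding error
    phi = math.acos(r) / 3.0
    return q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)

def surface_variation(neighborhood):
    """Near 0 for a flat patch, larger where the patch bends."""
    cov = covariance(neighborhood)
    trace = cov[0][0] + cov[1][1] + cov[2][2]
    if trace == 0.0:
        return 0.0
    return max(0.0, smallest_eigenvalue(cov)) / trace

def classify(feature, soft_min=0.02, sharp_min=0.10):
    """Hypothetical two-threshold split; the thresholds are illustrative assumptions."""
    if feature >= sharp_min:
        return "sharp"
    if feature >= soft_min:
        return "soft"
    return "flat"

def crease(slope):
    """A 5x5 patch of points folded along the y axis: z = slope * |x|."""
    return [(x, y, slope * abs(x)) for x in range(-2, 3) for y in range(-2, 3)]

for slope in (0.0, 0.5, 1.0):
    print(slope, classify(surface_variation(crease(slope))))
```

A conventional binary extraction would keep only the points above a single threshold. The second, lower threshold in this sketch is what lets the gentler crease show up as a soft edge instead of being discarded.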

They describe opacity–color gradation as follows:

“Opacity–color gradation renders soft edges with gradations in both color and opacity, i.e., with gradations in the RGBA space. This gradation achieves a comprehensive display of fine 3D structures recorded within the soft edges. This gradation also realizes the halo effect, which enhances the areas around sharp edges, leading to improved resolution and depth perception of 3D edges. The gradation in opacity is achieved using stochastic point-based rendering (SPBR), the high-quality transparent visualization method used for 3D scanned point cloud data.”
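As a rough illustration of what a gradation in RGBA space can look like, the sketch below maps a per-point edge-strength value to a color and an opacity. Everything in it is an assumption made for the sake of the example: the linear ramp, the `soft_min` and `sharp_min` thresholds, the blue-to-red colors and the `min_alpha` floor are hypothetical, and the paper's actual gradation functions may differ. It also only computes the target RGBA values; SPBR itself, which produces the transparency during rendering, is not implemented here.

```python
def lerp(a, b, t):
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t

def edge_rgba(feature, soft_min=0.02, sharp_min=0.10,
              soft_color=(0.0, 0.0, 1.0), sharp_color=(1.0, 0.0, 0.0),
              min_alpha=0.1):
    """Map an edge-strength value to (r, g, b, a), or None for non-edge points.

    Points at or above sharp_min are drawn fully opaque in sharp_color.
    Points between soft_min and sharp_min fade in both color and opacity.
    All parameter values are illustrative assumptions.
    """
    if feature < soft_min:
        return None  # not part of any edge, so not drawn
    t = min(1.0, (feature - soft_min) / (sharp_min - soft_min))
    r, g, b = (lerp(s, h, t) for s, h in zip(soft_color, sharp_color))
    return (r, g, b, lerp(min_alpha, 1.0, t))

for value in (0.01, 0.02, 0.06, 0.10, 0.50):
    print(value, edge_rgba(value))
```

Because the faintest soft-edge points are both the most transparent and the furthest in color from the sharp-edge color, they form a faded band around the sharp edges, which is roughly the halo effect the researchers describe.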

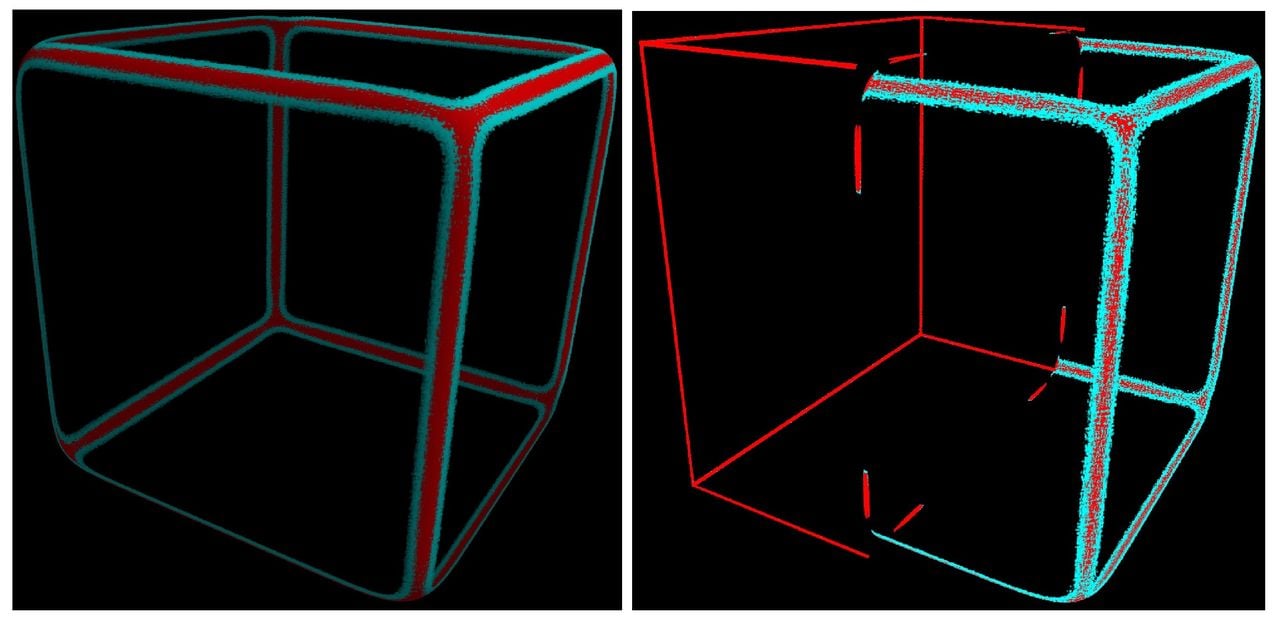

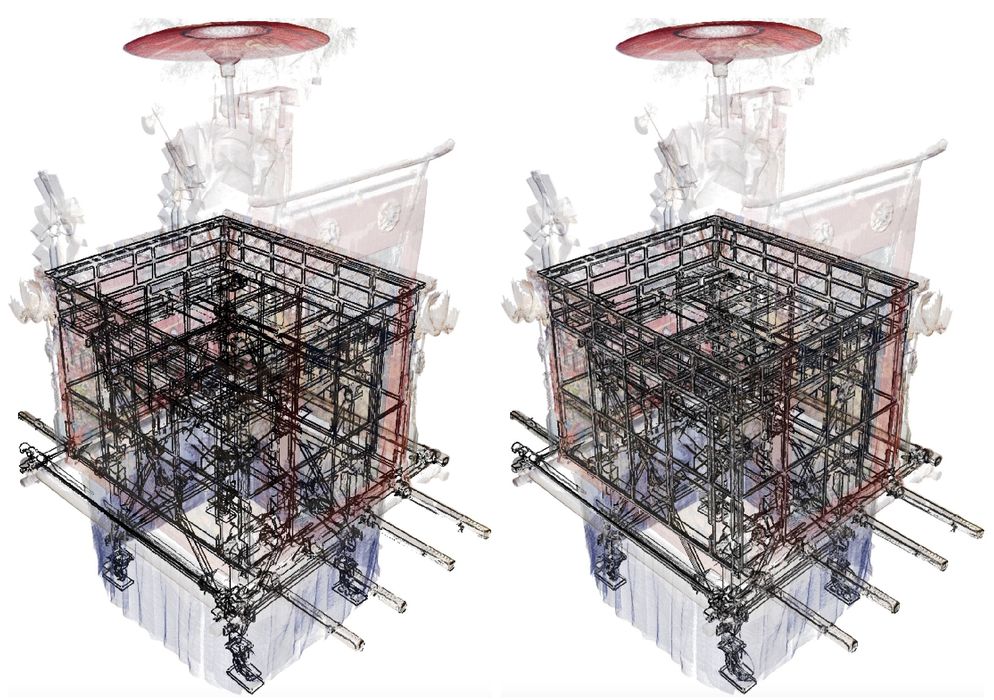

There’s a lot of math behind these algorithms, but do they work? Consider this example:

In this image we see an incredibly complex 3D scan on the left processed using conventional algorithms. The general shape is resolvable, but now look at the right image, which uses the new algorithms. There is considerably more detail and resolution of edges in the result.

The new method seems to be quite powerful, but does it require a massive amount of processing to complete? The researchers explain:

“For this work, computations for dual 3D edge extraction and the visualization processes were executed on our PC (MacBook Pro) with an Apple M2 Max chipset, a 38-core GPU, and 96 GB of memory. The computation speed depends on the CPU selection, while the number of points that can be handled depends on the main memory and GPU memory. (We also confirmed that data consisting of 10⁸ 3D points could be effectively handled even on a less powerful laptop PC with a 3.07 GHz Intel Core i7 processor, 8 GB of RAM, and an NVIDIA GeForce GT 480M GPU.)”

And:

“The computational time of each method depends on the point distribution of the target data. However, in any case, our proposed dual 3D edge extraction requires a computational time nearly comparable to that of the conventional binary 3D edge extraction.”

It seems to me that any 3D scanner manufacturer might want to take a close look at these developments and attempt to incorporate them into their software tools.

We could see a dramatic leap in scan accuracy in the coming years.

Via MDPI