Researchers have developed a powerful new method for filling holes in incomplete 3D scans.

If you’ve ever used a 3D scanner, you’ll know that the resulting scans are almost always incomplete. This is due to hardware limitations, the surface color of the subject, or simply the scanner’s inability to “reach” certain areas.

Post-processing software sometimes attempts to fill holes and missing regions, but in my experience the results are usually far from ideal. Simple holes on flat surfaces are easily filled, but more complex geometry is often beyond the capability of current algorithms.

The classic case occurs when 3D scanning a human head: the top of the head is often missing from the scan. Attempts to “fill the hole” usually produce an awful flat surface on top of the head, as if someone had sawed off part of the brain. This is not usable, and you’ll probably end up tediously reconstructing the missing surfaces with 3D editing.

Another scenario is using “make solid” repair functions. Typically they do indeed make the model solid, but in the process lose a huge amount of scan detail.

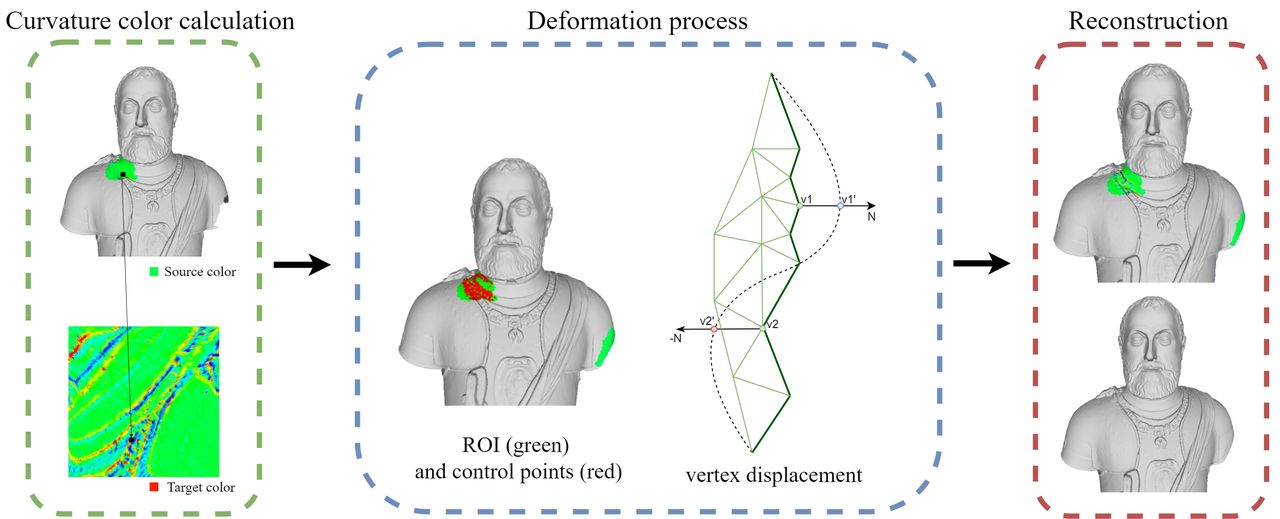

The researchers wanted to bring more advanced algorithms to bear on this problem. They used neural networks to implement a form of 2D inpainting for hole filling.

Inpainting is a common feature in today’s image editing software. It takes a selected region and fills it with “what is supposed to be there”, based on an analysis of the rest of the image, particularly the immediately surrounding area.
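The researchers’ networks are learned, but the basic idea of filling a region from its surroundings can be illustrated with a much simpler, classical technique. The sketch below is not their method: it is a minimal diffusion (harmonic) inpainting in NumPy, in which each missing pixel is repeatedly replaced by the average of its four neighbours until the hole blends smoothly into its border. The function name `diffusion_inpaint` and its `iterations` parameter are illustrative choices, not anything from the paper.

```python
import numpy as np


def diffusion_inpaint(image: np.ndarray, mask: np.ndarray, iterations: int = 500) -> np.ndarray:
    """Fill masked pixels by repeatedly averaging their four neighbours.

    Illustrative classical technique, not the paper's neural approach.
    image: 2D float array; values under the mask are ignored.
    mask:  2D bool array, True where pixels are missing.
    """
    filled = image.astype(float).copy()
    # Start the hole at the mean of the known pixels.
    filled[mask] = filled[~mask].mean()
    for _ in range(iterations):
        # Pad by edge replication so border pixels also have four neighbours.
        padded = np.pad(filled, 1, mode="edge")
        neighbour_avg = (
            padded[:-2, 1:-1] + padded[2:, 1:-1] + padded[1:-1, :-2] + padded[1:-1, 2:]
        ) / 4.0
        # Only the missing pixels are updated; known pixels stay fixed.
        filled[mask] = neighbour_avg[mask]
    return filled
```

Because the fill is just a smooth interpolation of the hole’s border, this kind of method only works well for small, featureless gaps; producing plausible detail inside large holes is what a trained network is meant to add.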

The researchers realized that the surfaces of a 3D scan might be fixable in a similar manner.

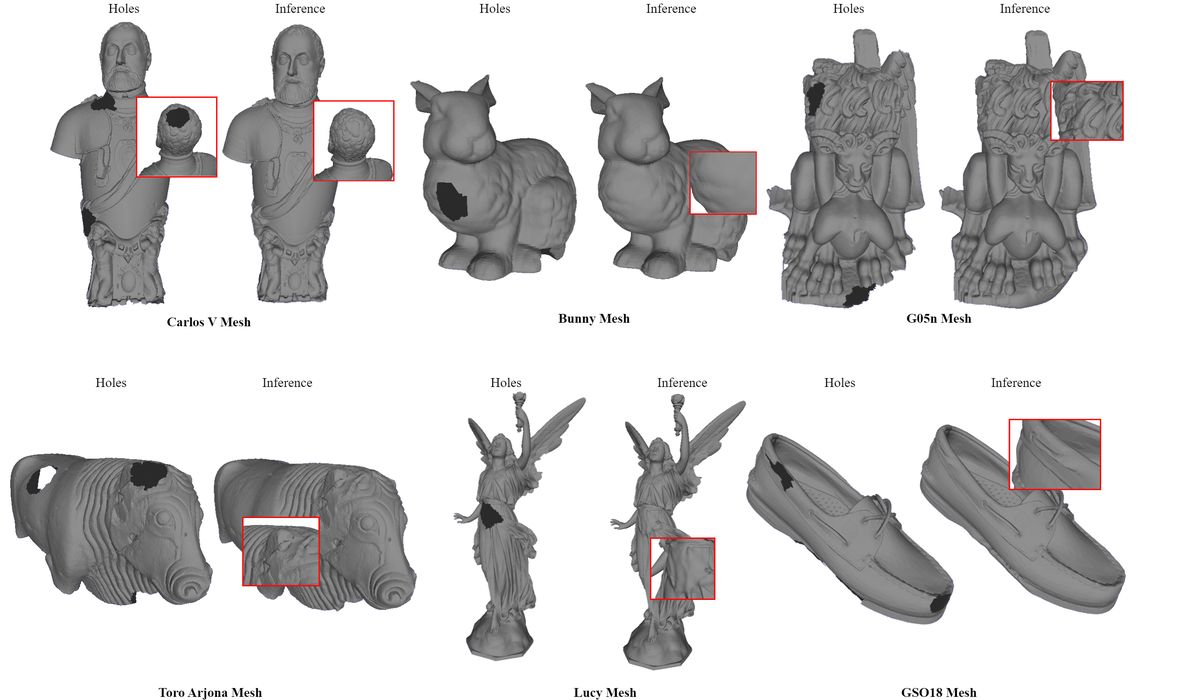

They developed a huge library of “broken” 3D models for training. They used complete 3D models from a variety of public sources, and corrupted them with a script to introduce holes of varying sizes and geometries.
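A corruption script of this kind might look something like the sketch below. The paper’s actual script is not described here, so this is an assumption throughout: it works on a plain vertex/face array representation, and the hypothetical `punch_hole` and `corrupt_mesh` functions simply delete every triangle whose centroid falls within a random radius of a randomly chosen vertex. Real training data would also need non-spherical holes to cover the “varying geometries” the researchers mention.

```python
import numpy as np


def punch_hole(vertices: np.ndarray, faces: np.ndarray, radius: float,
               rng: np.random.Generator) -> np.ndarray:
    """Hypothetical helper: drop every triangle whose centroid lies within
    `radius` of a randomly chosen vertex. Returns the surviving faces."""
    centre = vertices[rng.integers(len(vertices))]
    centroids = vertices[faces].mean(axis=1)  # (M, 3) triangle centroids
    keep = np.linalg.norm(centroids - centre, axis=1) > radius
    return faces[keep]


def corrupt_mesh(vertices: np.ndarray, faces: np.ndarray, n_holes: int = 3,
                 min_radius: float = 0.05, max_radius: float = 0.2,
                 seed: int = 0) -> np.ndarray:
    """Hypothetical corruption script: punch `n_holes` spherical holes of random size.

    Radii are in model units, so this assumes meshes normalised to roughly unit size.
    Vertices are left untouched; some simply become unreferenced.
    """
    rng = np.random.default_rng(seed)
    holed = faces
    for _ in range(n_holes):
        radius = rng.uniform(min_radius, max_radius)
        holed = punch_hole(vertices, holed, radius, rng)
    return holed
```

Each complete model and its corrupted copy would then form one training pair; fixing the seed makes the corruption reproducible.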

Their neural networks were then trained to map the “holed” models to their “complete” counterparts. They explain:

“To address this issue, we propose a technique that incorporates neural network-based 2D inpainting to effectively reconstruct 3D surfaces. Our customized neural networks were trained on a dataset containing over 1 million curvature images. These images show the curvature of vertices as planar representations in 2D. Furthermore, we used a coarse-to-fine surface deformation technique to improve the accuracy of the reconstructed pictures and assure surface adaptability. This strategy enables the system to learn and generalize patterns from input data, resulting in the development of precise and comprehensive three-dimensional surfaces.”
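The quote doesn’t say which curvature measure or which 2D mapping the researchers used, so the following is only a sketch under two stated assumptions: per-vertex curvature is the discrete Gaussian curvature given by the angle defect (2π minus the sum of the triangle angles at a vertex), and the surface patch is flattened by orthographic projection onto its best-fit plane, found with an SVD. The names `angle_defect` and `curvature_image` are hypothetical. Pixels that receive no vertex are returned as a mask, which is the kind of gap a 2D inpainting step would then fill.

```python
import numpy as np


def angle_defect(vertices: np.ndarray, faces: np.ndarray) -> np.ndarray:
    """Assumed curvature measure: 2*pi minus the sum of incident triangle angles.

    Meaningful for interior vertices; on an open boundary the value is not a curvature.
    """
    defect = np.full(len(vertices), 2.0 * np.pi)
    for k in range(3):
        # Corner k of every triangle, and the other two corners of the same triangle.
        a = faces[:, k]
        b = faces[:, (k + 1) % 3]
        c = faces[:, (k + 2) % 3]
        e1 = vertices[b] - vertices[a]
        e2 = vertices[c] - vertices[a]
        cos = (e1 * e2).sum(axis=1) / (
            np.linalg.norm(e1, axis=1) * np.linalg.norm(e2, axis=1)
        )
        # Subtract each triangle's angle at corner a; ufunc.at handles repeated indices.
        np.subtract.at(defect, a, np.arccos(np.clip(cos, -1.0, 1.0)))
    return defect


def curvature_image(vertices: np.ndarray, values: np.ndarray, size: int = 64):
    """Assumed flattening: project onto the best-fit plane and rasterise per-vertex values.

    Returns the image and a boolean mask of pixels that received at least one vertex.
    """
    centred = vertices - vertices.mean(axis=0)
    # Rows of vt are principal directions; the first two span the best-fit plane.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    uv = centred @ vt[:2].T  # (N, 2) planar coordinates
    lo, hi = uv.min(axis=0), uv.max(axis=0)
    scale = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero on a flat axis
    pix = np.clip(((uv - lo) / scale * (size - 1)).round().astype(int), 0, size - 1)
    total = np.zeros((size, size))
    count = np.zeros((size, size))
    np.add.at(total, (pix[:, 1], pix[:, 0]), values)
    np.add.at(count, (pix[:, 1], pix[:, 0]), 1)
    image = np.zeros((size, size))
    # Average the values of all vertices that land in the same pixel.
    np.divide(total, count, out=image, where=count > 0)
    return image, count > 0
```

A single planar projection only makes sense for a roughly height-field-like patch, such as the region around one hole; on a closed surface, points from opposite sides land on the same pixels.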

The result is a neural network that can take an arbitrary 3D scan and make it properly solid without losing significant detail, even in complex geometric situations.

“Our method consistently demonstrates a significantly reduced maximum distance in comparison to alternative approaches when the Hausdorff distance is taken into account. This finding implies that our methodology is proficient in mitigating substantial errors within the reconstructed meshes. In addition, the mean distance metric consistently outperforms other methods, suggesting that our reconstructions produce more consistent and stable results across the test models.

The effectiveness of our method in reducing significant errors (as indicated by the smaller maximum distance) while maintaining overall stability and accuracy (as indicated by the smaller mean distance) across a variety of test scenarios is underscored by these results.”
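To make the two metrics concrete, here is a brute-force sketch that compares two point sets sampled from a reconstructed surface and a reference surface. The symmetric Hausdorff distance is the largest nearest-neighbour distance in either direction, so it captures the single worst error; the mean distance averages all nearest-neighbour distances in both directions. The exact mean-distance definition used in the paper isn’t given in the quote, so the symmetric average here is an assumption, as are the function names.

```python
import numpy as np


def nearest_distances(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """For each point in a, the distance to its nearest point in b (brute force, O(N*M))."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)


def hausdorff_and_mean(a: np.ndarray, b: np.ndarray) -> tuple[float, float]:
    """Symmetric Hausdorff distance and (assumed) symmetric mean nearest-neighbour distance."""
    d_ab = nearest_distances(a, b)
    d_ba = nearest_distances(b, a)
    hausdorff = max(d_ab.max(), d_ba.max())  # worst-case error in either direction
    mean = (d_ab.sum() + d_ba.sum()) / (len(d_ab) + len(d_ba))
    return float(hausdorff), float(mean)


reference = np.array([[0.0, 0, 0], [1, 0, 0]])
reconstruction = np.array([[0.0, 0, 0], [1, 0, 0], [3, 0, 0]])  # one stray point
print(*hausdorff_and_mean(reference, reconstruction))  # → 2.0 0.4
```

In the example, a single stray point at x = 3 sets the Hausdorff distance to 2 while the mean stays at 0.4, which is why the two numbers are reported together. For real meshes, the brute-force comparison would be replaced with a spatial index; SciPy, for instance, provides `scipy.spatial.distance.directed_hausdorff`.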

This is incredibly good news for 3D scanning. If this technology were commercialized and integrated into 3D scanning software, it would greatly improve scan results. It could also speed up the scanning process, since systems would not need to capture the entire shape.

However, there is one possible issue: the process appears to be fairly slow. They explain:

“We opted to establish a restricted inference time of 9 minutes for running our inpainting neural networks. This choice allows for equitable comparisons between our approach and other cutting-edge methods. The hole completion of our complete dataset, which includes 959 models, was executed in approximately one week, as each mesh correction operation needs a maximum of nine minutes.”
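As a rough check on the “approximately one week” figure, assume every one of the 959 models used the full nine-minute budget (the quote gives nine minutes as a maximum, so this is an upper bound):

$$
959 \times 9\ \text{min} = 8631\ \text{min} \approx 143.9\ \text{h} \approx 6.0\ \text{days}
$$

That is consistent with the stated week of processing.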

That seems long for an automated process, but on the other hand, manual reconstructions can take far longer and would be more expensive.

Via arXiv