Charles R. Goulding and Andressa Bonafe investigate how NVIDIA’s pivot to generative physical AI, innovative platforms like Jetson Thor, and advances in 3D printing are revolutionizing the robotics industry.

NVIDIA, the world’s most valuable semiconductor company, is positioning itself at the forefront of the robotics revolution. Renowned for its leading position in AI chipmaking, NVIDIA has shifted its focus to a burgeoning area it calls “physical AI,” which encompasses robotics and humanoid systems. The pivot rests on the company’s vision of generative physical AI: combining advances in AI with robotics to create autonomous machines that can interact seamlessly with their environments and with humans. Generative physical AI refers to systems that not only process data but also act on it in physical space, enabling robots to adapt, learn, and perform complex tasks with a degree of intelligence that approaches human cognition.

NVIDIA’s focus on physical AI comes as competition intensifies in its core AI chip business. To maintain its edge, NVIDIA is developing innovative technologies, including its next-generation Jetson Thor computing platform, set for launch in the first half of 2025. This initiative aligns with the company’s broader strategy to provide the computational backbone for advanced robotics, enabling new levels of autonomy and interaction between robots and humans.

Technical Breakthroughs Driving Physical AI

The timing of NVIDIA’s focus on physical AI is driven by several key technical breakthroughs. Advances in generative AI, foundation models, and simulation environments have reached a tipping point, as Deepu Talla, NVIDIA’s vice president of robotics and edge computing, noted in a recent Financial Times article. These breakthroughs enable robots to learn from their surroundings, adapt to dynamic environments, and bridge the historical “Sim-to-Real gap” that has hindered the transfer of skills from virtual training to real-world application. NVIDIA’s Omniverse platform exemplifies this synergy, allowing developers to train robots in highly realistic virtual settings before deploying them in real-world scenarios. By addressing these challenges, NVIDIA aims to ensure that robots trained in simulated environments can operate reliably and efficiently in complex, real-world tasks.

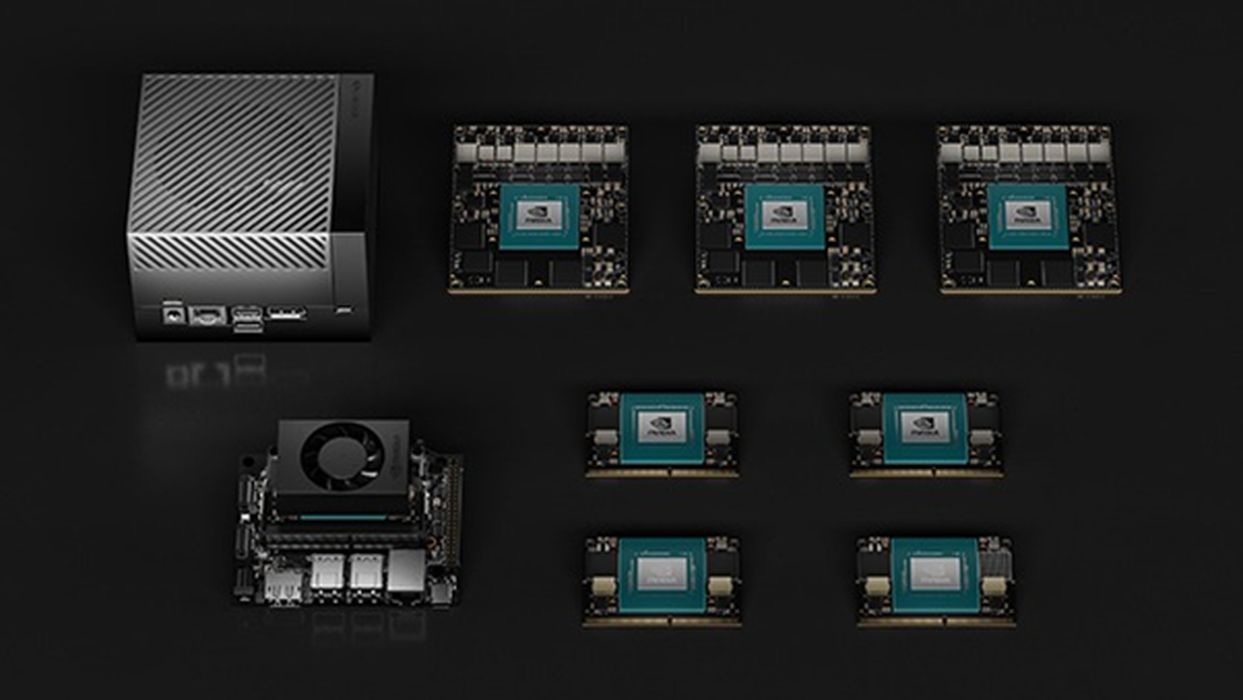

Jetson Thor: A Game-Changer for Robotics

Jetson Thor represents a critical milestone in NVIDIA’s efforts to fuel the robotics industry. As the latest addition to the company’s Jetson platform, a series of compact computers known for their AI capabilities, this iteration is tailored specifically for robotics applications. Jetson embedded systems are valued for delivering high performance while maintaining energy efficiency, which makes them well suited to edge AI deployments. Jetson Thor builds on this legacy with advances in parallel processing, enabling faster execution of the AI workloads that real-time robotics depends on. The platform is designed to handle complex tasks while enabling safe and natural interactions with both people and machines, and its modular architecture is optimized for performance, efficiency, and compactness.

The Jetson platform supports a comprehensive suite of tools, including the NVIDIA DeepStream SDK, which allows developers to create applications for intelligent video analytics and sensor fusion. These capabilities are vital for robots to process inputs from multiple sensors, such as cameras and LiDAR, and to make decisions autonomously. Additionally, Jetson devices integrate seamlessly with NVIDIA’s CUDA libraries, enabling developers to optimize AI models for enhanced performance. This combination of flexibility and power promises to make Jetson Thor a pivotal tool for the next generation of humanoid robots.

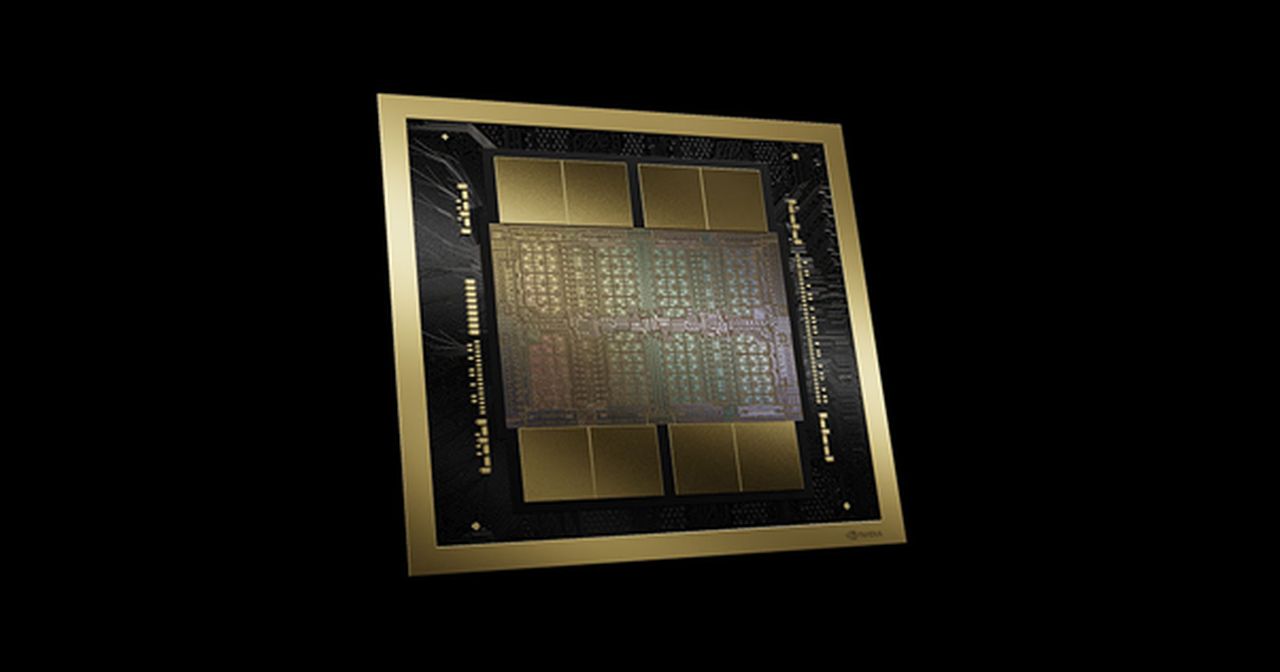

Blackwell Architecture: Powering the Next Generation

NVIDIA’s recently announced Blackwell architecture adds another dimension to its AI and robotics advancements. This next-generation GPU architecture introduces innovations in performance, energy efficiency, and scalability. Blackwell is designed to handle the immense computational demands of generative AI, robotics, and other high-performance applications. It achieves this through enhanced tensor core technology, improved memory bandwidth, and the ability to scale across various deployment scenarios, from data centers to edge computing environments. These advancements make it possible for robotics platforms like Jetson Thor to process complex real-time data and AI workloads with unprecedented speed and precision.

The Isaac Platform: Bridging Software and Hardware

Beyond its hardware offerings, NVIDIA’s Isaac robotics platform strengthens its position as a trailblazer in physical AI. The Isaac platform offers a complete ecosystem for robotics development, including Isaac SDK, which provides a collection of accelerated libraries, tools, and reference applications for AI-powered robots. The platform also features Isaac ROS, enabling seamless integration of AI models and computer vision into the Robot Operating System (ROS) framework. Additionally, Isaac Sim, a state-of-the-art simulation tool, allows developers to train, test, and validate robotics applications in highly realistic virtual environments.

One of the standout capabilities of Isaac Sim is its ability to close the “Sim-to-Real gap.” By replicating physical environments with unparalleled accuracy, developers can create simulations that closely mimic real-world conditions, ensuring that robots perform as expected when deployed. The platform also supports collaborative multi-robot systems, making it invaluable for industries such as logistics, manufacturing, and autonomous delivery.

By offering these integrated tools, NVIDIA empowers robotics developers to tackle complex challenges such as sensor integration, motion planning, and AI-based decision-making. The Isaac platform bridges the gap between software and hardware, providing a scalable and flexible solution for production-ready robots.

3D Printing: Accelerating the Robotics Revolution

3D printing can help open the way for the physical AI revolution by enabling rapid prototyping and customization of robotic parts. Engineers can iterate on designs quickly, ensuring seamless integration between AI systems like Jetson and physical hardware. From specialized grippers and actuators for collaborative robots to modular components for advanced cooling systems, 3D printing fosters innovation and affordability across many applications.

NVIDIA technologies such as Omniverse, Neuralangelo, and LATTE3D have advanced 3D scanning and design in different ways. Omniverse facilitates collaborative workflows and virtual environments; Neuralangelo enables highly detailed surface reconstruction from images or video; and LATTE3D accelerates 3D model generation, providing the rapid prototyping capabilities that robotics and AI development depend on.

NVIDIA’s investments in metal 3D printing highlight its strategic focus on advancing physical AI through additive manufacturing. The company has backed Freeform, which utilizes AI-driven autonomous systems to create high-performance metal components for robotics. Additionally, NVIDIA led a $99 million funding round for Seurat Technologies, a startup specializing in scalable, laser-based metal additive manufacturing. These initiatives enable the production of lightweight, durable components critical for bridging generative AI designs with real-world robotic applications.

The Research & Development Tax Credit

The now-permanent Research and Development (R&D) Tax Credit is available for companies developing new or improved products, processes, and/or software.

3D printing can help boost a company’s R&D Tax Credits. Wages paid to technical employees who create, test, and revise 3D-printed prototypes can be included in the credit in proportion to the share of their time spent on eligible activities. Similarly, when 3D printing is used to improve a process, time spent integrating 3D printing hardware and software counts as an eligible activity. Lastly, when 3D printing is used for modeling and preproduction, the cost of filament consumed during the development process may also be recovered.
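The wage and supply allocation described above comes down to simple arithmetic: each employee’s wages are weighted by the share of their time spent on eligible work, and consumed filament is added as a supply cost. The sketch below illustrates only that allocation step under those assumptions. The `Employee` record, the function names, and the sample figures are hypothetical, and the actual credit applies further rules that are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Employee:
    """Hypothetical record for one technical employee (illustrative only)."""
    name: str
    annual_wages: float       # total wages for the year
    eligible_fraction: float  # share of time on eligible 3D-printing R&D work, 0.0 to 1.0


def qualified_wages(employees):
    """Sum each employee's wages weighted by their share of eligible time.

    Covers only the wage-allocation step; it is not a full credit calculation.
    """
    total = 0.0
    for e in employees:
        if not 0.0 <= e.eligible_fraction <= 1.0:
            raise ValueError(f"eligible_fraction out of range for {e.name}")
        total += e.annual_wages * e.eligible_fraction
    return total


def qualified_supplies(filament_costs):
    """Sum the cost of filament consumed during modeling and preproduction."""
    return sum(filament_costs)


if __name__ == "__main__":
    # Hypothetical team: an engineer spending 60% and a technician 25% of their time on eligible work.
    team = [
        Employee("design engineer", 120_000, 0.60),
        Employee("test technician", 80_000, 0.25),
    ]
    print(qualified_wages(team))
    print(qualified_supplies([350.0, 410.5]))
```

In this example, 60% of the engineer’s wages and 25% of the technician’s wages count toward the wage base, so a rough tally of eligible time is what drives the result.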

Whether it is used for creating and testing prototypes or for final production, 3D printing is a great indicator that R&D Credit-eligible activities are taking place. Companies implementing this technology at any point should consider taking advantage of R&D Tax Credits.

Conclusion

Hoping to be at the forefront of generative physical AI, NVIDIA is building on technologies such as Jetson, Blackwell, and Isaac to bridge the gap between virtual design and real-world applications. Its efforts are paving the way for humanoid robots that can unlock vast opportunities for innovation, including new forms of collaboration between humans and machines. 3D printing can help accelerate this robotic revolution by enabling rapid design iteration, reducing manufacturing costs, and facilitating the production of highly customized components for advanced robotic systems.