AMGPT is an experimental large language model that can help metal 3D printer operators.

AI is becoming a normal part of life these days, at least for me. It’s not at all surprising that it is being applied to 3D printing technology as well, and we’re seeing more examples of this every week.

A recent research paper described an experimental system called “AMGPT”, which can answer questions about metal 3D printing. The interesting part is how they put it all together.

We commonly use AI tools like ChatGPT, Claude or similar as a black box: you type a question, it gives you an answer. While it looks simple from the outside, it’s actually quite complex behind the scenes.

The research paper explains how they constructed the AMGPT system using available tools and input data, including how they tuned the system to provide the best results.

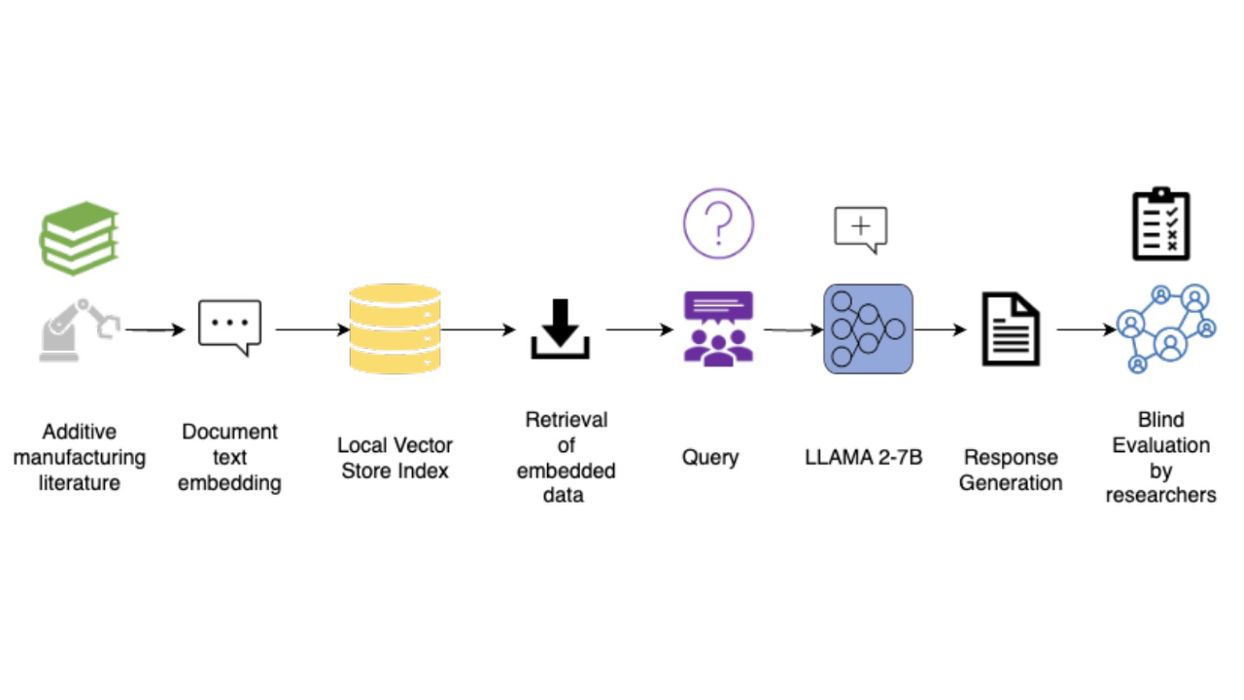

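Their approach was to gather around 50 papers and texts on metal 3D printing and make that material available to an LLM. As the paper’s own summary makes clear, this is a retrieval-augmented generation (RAG) setup: relevant passages are looked up and handed to the model alongside the question, rather than the model itself being retrained. Above you can see the basic workflow they followed, starting with the material and ending with researchers testing the results of queries for accuracy.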

They had to encode all the knowledge first, which is a complex step. That meant splitting the material into chunks and transforming each chunk into a numerical vector, so that passages relevant to a question can be found by similarity.
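To make the chunk-and-encode idea concrete, here is a minimal Python sketch. It is not the paper’s pipeline: `chunk_text` and `embed` are hypothetical helpers, the chunk sizes are arbitrary, and the hashing-based “embedding” is a toy stand-in for the learned embedding model a real system would use.

```python
import hashlib
import math


def chunk_text(text, chunk_size=8, overlap=2):
    """Split text into overlapping chunks of `chunk_size` words (illustrative sizes)."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def embed(text, dim=64):
    """Toy embedding (assumption): hash each word into a bucket, then L2-normalise."""
    vec = [0.0] * dim
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


chunks = chunk_text("a b c d e f g h i j", chunk_size=4, overlap=1)
vectors = [embed(chunk) for chunk in chunks]
```

The overlap between neighbouring chunks is a common trick to avoid cutting a sentence’s meaning in half at a chunk boundary; whether the researchers used overlap is not stated in the article.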

Once the material was encoded, they had to tune the results. One factor they tested was the “temperature”, a parameter that affects the randomness and creativity of the responses. Set it too high and you get more diverse responses, but possibly more errors, too. Low temperatures produce focused output that is not particularly creative.
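For the curious, temperature is usually applied by dividing the model’s raw scores for each candidate word by the temperature before turning them into probabilities. The sketch below shows that standard calculation in Python; the function name and the example scores are made up for illustration and are not taken from the paper.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
scores = [2.0, 1.0, 0.5]
focused = softmax_with_temperature(scores, temperature=0.2)  # low temperature
diverse = softmax_with_temperature(scores, temperature=2.0)  # high temperature
```

At the low temperature nearly all of the probability lands on the top-scoring word, while at the high temperature the three options end up much closer together, which is where the extra “creativity” (and risk of error) comes from.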

They also played with the “System Prompt”. This is a hidden prompt that is injected before any user query, and normally sets the stage for the output and provides any constraints. Some examples they used:

“You are an AI assistant that answers questions in a friendly manner, based on the given source.”

Or:

“You are an expert on additive manufacturing that answers questions in a friendly manner, based on the given source documents.”

Or:

“You are a science and technology populariser who seeks to explain concepts in a simple manner.”

You can see how these may affect the results from the LLM, and how it is important to tune them.
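Here is a rough Python sketch of how a system prompt is typically combined with retrieved source text and the user’s question. The role/content message layout is a common convention among chat-style LLM interfaces, not something the article confirms for AMGPT; `build_messages`, the placeholder source passage and the sample question are all hypothetical.

```python
def build_messages(system_prompt, sources, question):
    """Assemble a chat-style message list: hidden system prompt first, user query last."""
    context = "\n\n".join(f"[Source {i + 1}] {s}" for i, s in enumerate(sources))
    if sources:
        user_content = f"{context}\n\nQuestion: {question}"
    else:
        user_content = f"Question: {question}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]


# One of the system prompts quoted in the article.
EXPERT_PROMPT = (
    "You are an expert on additive manufacturing that answers questions "
    "in a friendly manner, based on the given source documents."
)

messages = build_messages(
    EXPERT_PROMPT,
    ["Placeholder passage retrieved from the corpus."],  # hypothetical source text
    "What causes porosity in a printed part?",  # hypothetical user question
)
```

Swapping `EXPERT_PROMPT` for one of the other prompts changes nothing the user sees in their own question, yet it can noticeably shift the tone and depth of the answer.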

Did the system work? Apparently so:

“We characterized RAG methods by varying inference parameters to produce a reliable metal additive manufacturing expert LLM that can be queried through a user interface. Due to the nature of the corpus-referencing task in constraining a response to be consistent with an external factual base, minimizing the topk and temperature parameters yielded the most relevant results. Despite having less than 0.5% of the parameters that GPT-4 has, our RAG system is able to maintain high fidelity and accuracy of answers.”
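The quote does not spell out which “topk” it means. One common reading in RAG systems is the number of best-matching chunks retrieved and handed to the model, and the Python sketch below assumes that reading (the term can also refer to a sampling setting inside the model). The `cosine` and `retrieve` helpers and the tiny two-dimensional vectors are invented for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec, chunk_vecs, top_k=2):
    """Return the indices of the `top_k` chunks most similar to the query."""
    ranked = sorted(
        range(len(chunk_vecs)),
        key=lambda i: cosine(query_vec, chunk_vecs[i]),
        reverse=True,
    )
    return ranked[:top_k]


# Made-up vectors: chunk 1 matches the query exactly, chunk 2 partially, chunk 0 not at all.
chunk_vectors = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
query_vector = [1.0, 0.0]

best_two = retrieve(query_vector, chunk_vectors, top_k=2)
best_one = retrieve(query_vector, chunk_vectors, top_k=1)
```

Under this reading, a smaller `top_k` means the model sees only the closest matches, which fits the researchers’ finding that minimizing it gave the most relevant answers.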

With this shown to work, it seems others could produce similar LLMs for other aspects of 3D printing. Imagine, for example, an LLM built around desktop 3D printer knowledge. It would be able to answer many troubleshooting questions and could be incredibly helpful.

I’m wondering why none of the desktop 3D printer companies have built such a thing, as it would immensely enhance their support capabilities, reduce customer calls and improve customer experiences.

Via arXiv