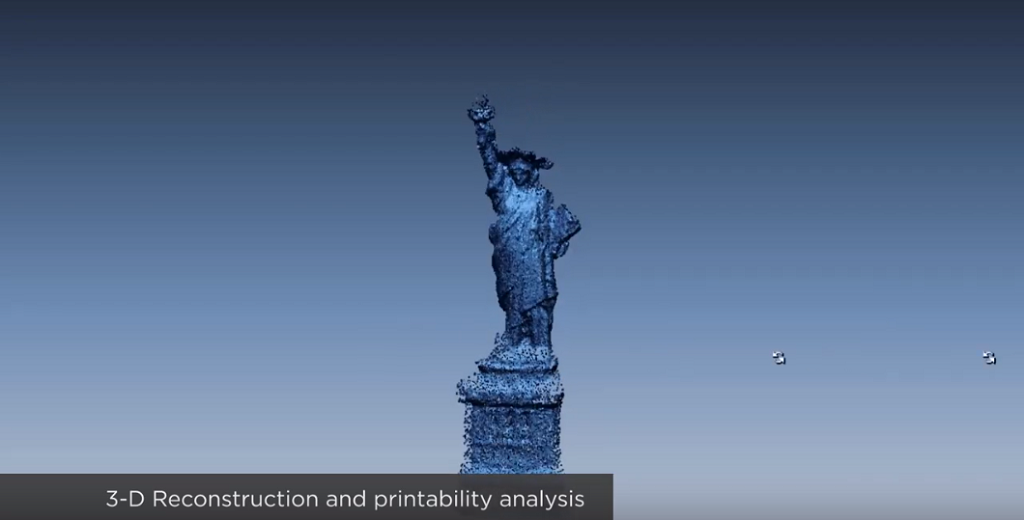

![A Statue of Liberty model being populated in Source Form [Image: Virginia Tech via YouTube]](https://fabbaloo.com/wp-content/uploads/2020/05/SourceFormmodel_img_5eb0933302630.png)

A new project from Virginia Tech is perhaps the closest we’ve yet come to 3D printing as a Star Trek Replicator.

“Tea. Earl grey. Hot.” And there it is. That’s the real (well, “real”) Replicator: it takes a quick, clear command and brings it to the physical world. Captain Picard gets his perfect cup of tea nearly instantly just by telling the system what he wants, and viewers have for years held this as a vision of the on-demand future.

That was, for a long time, the far-fetched promise of the 3D printer. It’s still solidly sci-fi, as the consumer 3D printing crash some years ago underscored.

But perhaps it won’t stay in the realms of the starship Enterprise.

An interdisciplinary team from Virginia Tech has brought together artists and engineers to build a new 3D printing system that produces prints from crowdsourced data.

“In some regards, this project is our step towards the Star Trek ‘replicator’ vision of a user being able to get any part fabricated with a simple search. Instead of requiring a 3D model of the object, the model is created on-demand from the crowdsourced images,” Christopher B. Williams, Ph.D., Professor in the Department of Mechanical Engineering, Electro Mechanical Corporation Senior Faculty Fellow and J. R. Jones Faculty Fellow, tells us.

Crowdsourcing Data

The project is called Source Form.

Its abstract explains:

“Source Form is a stand-alone device capable of collecting crowdsourced images of a user-defined object, stitching together available visual data (e.g., photos tagged with search term) through photogrammetry, creating watertight models from the resulting point cloud and 3D printing a physical form. This device works completely independent of subjective user input resulting in two possible outcomes:

1. Produce iterative versions of a specific object (e.g., the Statue of Liberty) increasing in detail and accuracy over time as the collective dataset (e.g., uploaded images of the statue) grows.

2. Produce democratized versions of common objects (e.g., an apple) by aggregating a spectrum of tagged image results.”
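The four stages the abstract lists (collect tagged images, stitch them together through photogrammetry, make the result watertight, print) can be pictured as a simple chain of functions. This is a minimal sketch under stated assumptions: `SourceFormPipeline`, its stage names and the stub stages in `demo()` are hypothetical placeholders rather than the team's code, and a real system would plug an image-search service, a photogrammetry package and a printer driver into those slots.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical data types: the abstract names the stages, not their interfaces.
Images = list[str]                              # paths/URLs of photos tagged with the search term
PointCloud = list[tuple[float, float, float]]   # (x, y, z) points from photogrammetry
Mesh = dict                                     # stand-in for a watertight mesh


@dataclass
class SourceFormPipeline:
    """Toy orchestration of the four stages described in the Source Form abstract."""
    collect: Callable[[str], Images]                # crowdsourced image search
    reconstruct: Callable[[Images], PointCloud]     # photogrammetry
    make_watertight: Callable[[PointCloud], Mesh]   # close holes into a printable solid
    print_part: Callable[[Mesh], str]               # hand-off to the SLA printer

    def run(self, search_term: str) -> str:
        # The search term is the only input; no human edits happen between stages.
        images = self.collect(search_term)
        if not images:
            raise ValueError(f"no images found for {search_term!r}")
        cloud = self.reconstruct(images)
        mesh = self.make_watertight(cloud)
        return self.print_part(mesh)


def demo() -> tuple[str, list[str]]:
    """Run the pipeline with hypothetical stub stages that record their call order."""
    calls: list[str] = []

    def collect(term: str) -> Images:
        calls.append("collect")
        return [f"{term}_{i}.jpg" for i in range(3)]

    def reconstruct(images: Images) -> PointCloud:
        calls.append("reconstruct")
        return [(float(i), 0.0, 0.0) for i in range(len(images))]

    def make_watertight(cloud: PointCloud) -> Mesh:
        calls.append("make_watertight")
        return {"vertices": cloud, "watertight": True}

    def print_part(mesh: Mesh) -> str:
        calls.append("print_part")
        return f"printed mesh with {len(mesh['vertices'])} vertices"

    pipeline = SourceFormPipeline(collect, reconstruct, make_watertight, print_part)
    return pipeline.run("Statue of Liberty"), calls
```

The point of the sketch is structural: the search term is the only input, so nothing subjective enters between stages, which matches the abstract's claim that the device works independently of user input.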

Having trouble visualizing? They’ve released a short video demo:

Instead of asking for a nice cuppa, a user might input “Statue of Liberty”, in this case an easily and immediately identifiable landmark. Source Form searches for photos tagged with that term, stitches them together through photogrammetry into a full, watertight model, and sends that model to the system’s SLA 3D printer to bring it into reality.

And it gets better.

Not just the news: the system itself literally gets better.

As with most data-driven systems, more data tends to mean better results. Entering the same search term, say, six months later should produce a more detailed model, because more images will be available.

More people will have gone to the Statue of Liberty over that half-year period, and will have taken and tagged more publicly available images.
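Why would more photos produce a better model? Photogrammetry recovers a surface point by triangulating it from at least two photos taken from different positions, so regions seen by only one camera (or none) leave gaps. The toy model below is an illustration, not Source Form's method: it assumes cameras placed around the object at known azimuths, each seeing a fixed arc (`half_fov_deg`, a hypothetical 30 degrees), and reports the fraction of the object's circumference seen by at least `min_views` photos.

```python
def coverage(camera_azimuths_deg: list[float], half_fov_deg: float = 30.0,
             min_views: int = 2, bins: int = 360) -> float:
    """Toy model: fraction of an object's circumference seen by >= min_views photos.

    Each photo is assumed to see the arc within half_fov_deg of its camera's
    azimuth. Triangulation needs a point in at least two photos, hence the
    default min_views=2.
    """
    counts = [0] * bins
    for az in camera_azimuths_deg:
        for b in range(bins):
            center = (b + 0.5) * 360.0 / bins       # azimuth at the middle of bin b
            # Shortest angular distance between the bin and the camera direction.
            diff = abs((center - az + 180.0) % 360.0 - 180.0)
            if diff <= half_fov_deg:
                counts[b] += 1
    # A bin counts as reconstructable once enough photos see it.
    return sum(c >= min_views for c in counts) / bins


if __name__ == "__main__":
    six = [float(a) for a in range(0, 360, 60)]
    twelve = [float(a) for a in range(0, 360, 30)]
    print(coverage(six), coverage(twelve))
```

With six photos spaced 60 degrees apart, no part of the circumference is seen twice, so coverage is 0.0; twelve photos spaced 30 degrees apart bring it to 1.0. Because adding a photo can only raise view counts, coverage never drops as the image pool grows, which is the intuition behind prints improving over time.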

The abstract continues:

“As people continue to take pictures of the monument and upload them to social media, blogs and photo sharing sites, the database of images grows in quantity and quality. Because Source Form gathers a new dataset with each print, the resulting forms will always be evolving. The collection of prints the machine produces over time are cataloged and displayed in linear groupings, providing viewers an opportunity to see growth and change in physical space.”

Democratizing Data

![The Source Form setup [Image: Virginia Tech]](https://fabbaloo.com/wp-content/uploads/2020/05/ICATSourceform_img_5eb0933364fc2.jpg)

But what about when the search term is more general?

Searching for “apple”, for example, can bring about varied results. While your first thought may be a Red Delicious, someone else may be thinking of a Granny Smith or even a crab apple, or of the unnamed red apple that brought about Snow White’s curse-coma.

So what gets printed?

Popularity rules:

“In addition to rendering change over time, a snapshot of a more common object’s web perception could be created. For example, when an image search for ‘apple’ is performed, the results are a spectrum of condition and species from rotting crab apples to gleaming Granny-Smith’s. Source Form aggregates all of these images into one model and outputs the collective web presence of an ‘apple’. Characteristics of the model are guided by the frequency and order in response to the image web search. The resulting democratized forms are emblematic of the web’s collective and popular perceptions,” the abstract notes.
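The abstract says the aggregated form is guided by the “frequency and order” of the image-search results but does not say how the two are combined. One hypothetical scheme is reciprocal-rank weighting: the result at 0-based rank `i` gets weight `1 / (i + 1)`, and each tag's weights are summed, so a tag gains influence both by appearing often (frequency) and by appearing early (order). The function name, the tags and the weighting itself are assumptions for illustration only.

```python
from collections import defaultdict


def rank_weighted_shares(tags_in_rank_order: list[str]) -> dict[str, float]:
    """Normalized influence of each tag in a ranked list of image-search results.

    Hypothetical scheme: the result at 0-based rank i contributes 1 / (i + 1),
    so repeated tags accumulate weight (frequency) and earlier results
    contribute more than later ones (order).
    """
    totals: dict[str, float] = defaultdict(float)
    for rank, tag in enumerate(tags_in_rank_order):
        totals[tag] += 1.0 / (rank + 1)
    norm = sum(totals.values())
    if norm == 0:
        return {}  # no results, nothing to aggregate
    # Normalize so the shares sum to 1.
    return {tag: weight / norm for tag, weight in totals.items()}


if __name__ == "__main__":
    results = ["red delicious", "granny smith", "red delicious", "crab apple"]
    print(rank_weighted_shares(results))
```

For results tagged `["red delicious", "granny smith", "red delicious", "crab apple"]`, the shares come out to 0.64, 0.24 and 0.12: Red Delicious dominates because it appears twice, including first. In a real system these shares might weight the images fed into reconstruction rather than a list of labels.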

Why Crowdsource A 3D Print?

The team doesn’t specifically lay out application areas for Source Form, but the general approach, generating a printable model on demand from a simple search rather than from an existing 3D file, could make it quick and easy to source a fully 3D printable model.

If nothing else, it’s a genuinely neat demonstrator of the intersection of several disciplines. Art, machine learning, 3D printing, 3D modeling, engineering — these all come together just as the interdisciplinary team behind the project did in the first place.

The faculty and students who created Source Form hail from several areas of Virginia Tech; full credits include: Sam Blanchard (School of Visual Arts), Chris Williams, Viswanath Meenakshisundaram, Joseph Kubalak (Mechanical Engineering DREAMS Lab), Jia-Bin Huang and Sanket Lokegaonkar (Electrical & Computer Engineering). The project was primarily supported by Virginia Tech’s Institute for Creativity, Arts and Technology (ICAT), with additional support from the VT School of Visual Arts and the National Science Foundation.

The project is on display this week at ACM SIGGRAPH 2019 Studio in LA.

Via Virginia Tech
