If you’ve tried 3D scanning your friends with an inexpensive Kinect-based setup, you probably have strong feelings about hairstyles.

We’ve been doing a batch of human 3D scans in the lab and have once again run into one of the most troublesome problems: scanning hair.

Oh, we definitely appreciate hairstyles of all sorts. It’s just that we don’t like scanning them, particularly if they are complex and “big”. Why? Two reasons.

First, a Kinect works by measuring the depth (the distance from the camera) of each pixel it observes. With “complex” hair, that distance is nearly impossible for the Kinect to pin down, because its infrared rangefinding light sometimes passes straight through the hair to the skin underneath. Or not.
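To see why that “sometimes” matters, here is a toy sketch. It is not how the Kinect or Skanect actually decide anything; it just assumes we have several depth readings for one pixel across frames and flags the pixel as indeterminate when those readings disagree by more than a made-up threshold. The function name, the 20 mm threshold, and the sample readings are all hypothetical.

```python
import statistics

# Hypothetical spread threshold in millimetres; NOT a real Kinect or Skanect setting.
SPREAD_THRESHOLD_MM = 20.0


def classify_pixel(depths_mm, threshold_mm=SPREAD_THRESHOLD_MM):
    """Toy classifier: 'captured' if a pixel's depth readings agree, else 'indeterminate'."""
    # Treat 0 as "no reading", so dropouts are ignored rather than averaged in.
    valid = [d for d in depths_mm if d > 0]
    if len(valid) < 2:
        # Too few readings to say anything about this pixel.
        return "indeterminate"
    # Population standard deviation of the readings, in millimetres.
    spread = statistics.pstdev(valid)
    return "captured" if spread <= threshold_mm else "indeterminate"


# Made-up readings: a cheek returns a steady depth, while hair flips between
# the hair surface (~760 mm) and the skin behind it (~815 mm), with one dropout.
cheek = [812, 815, 811, 814, 813]
hair = [760, 812, 0, 758, 815]
print(classify_pixel(cheek))  # captured
print(classify_pixel(hair))   # indeterminate
```

The cheek readings stay within a few millimetres of each other, while the hair readings jump between two surfaces, so no single depth describes that pixel.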

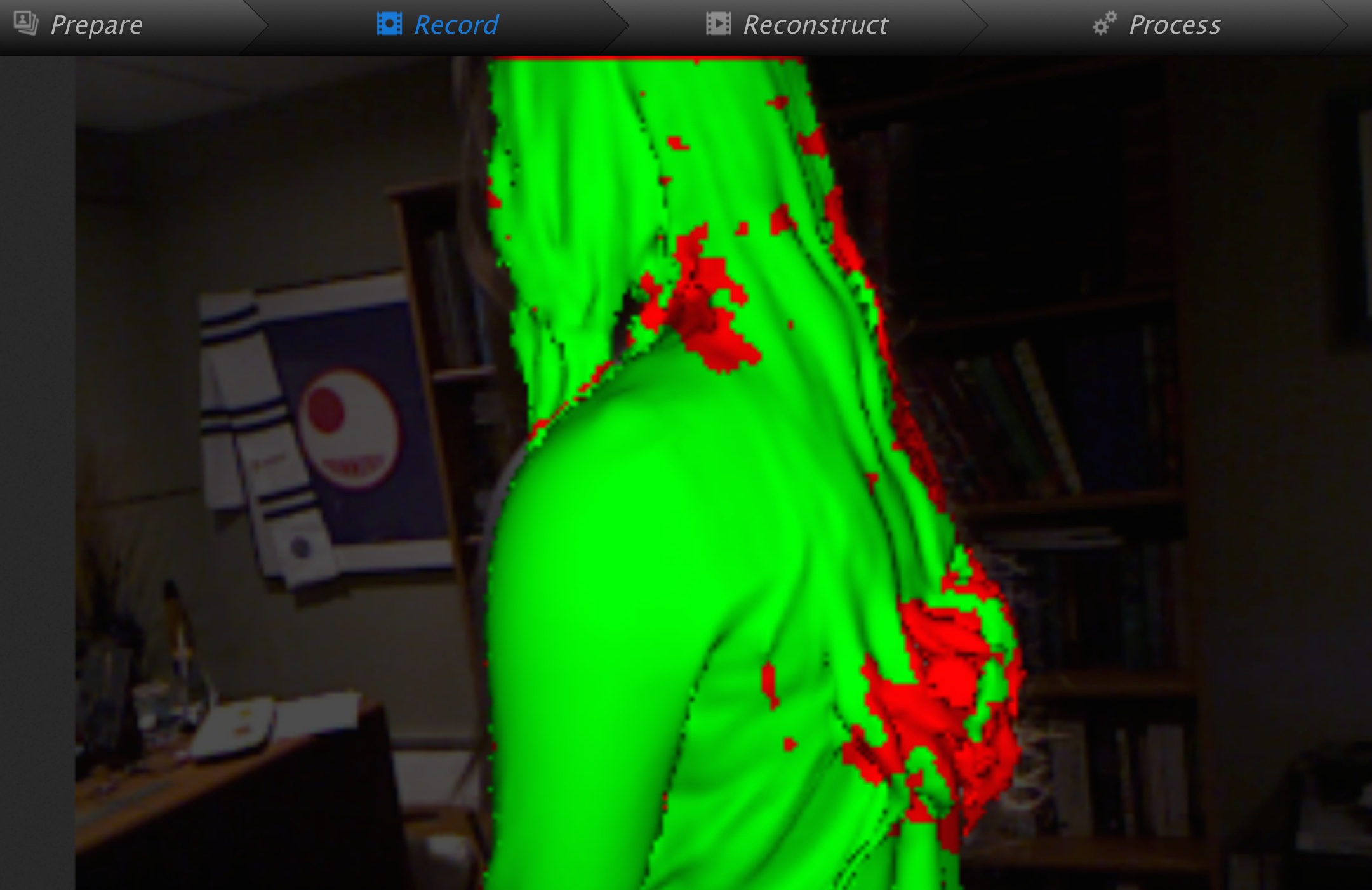

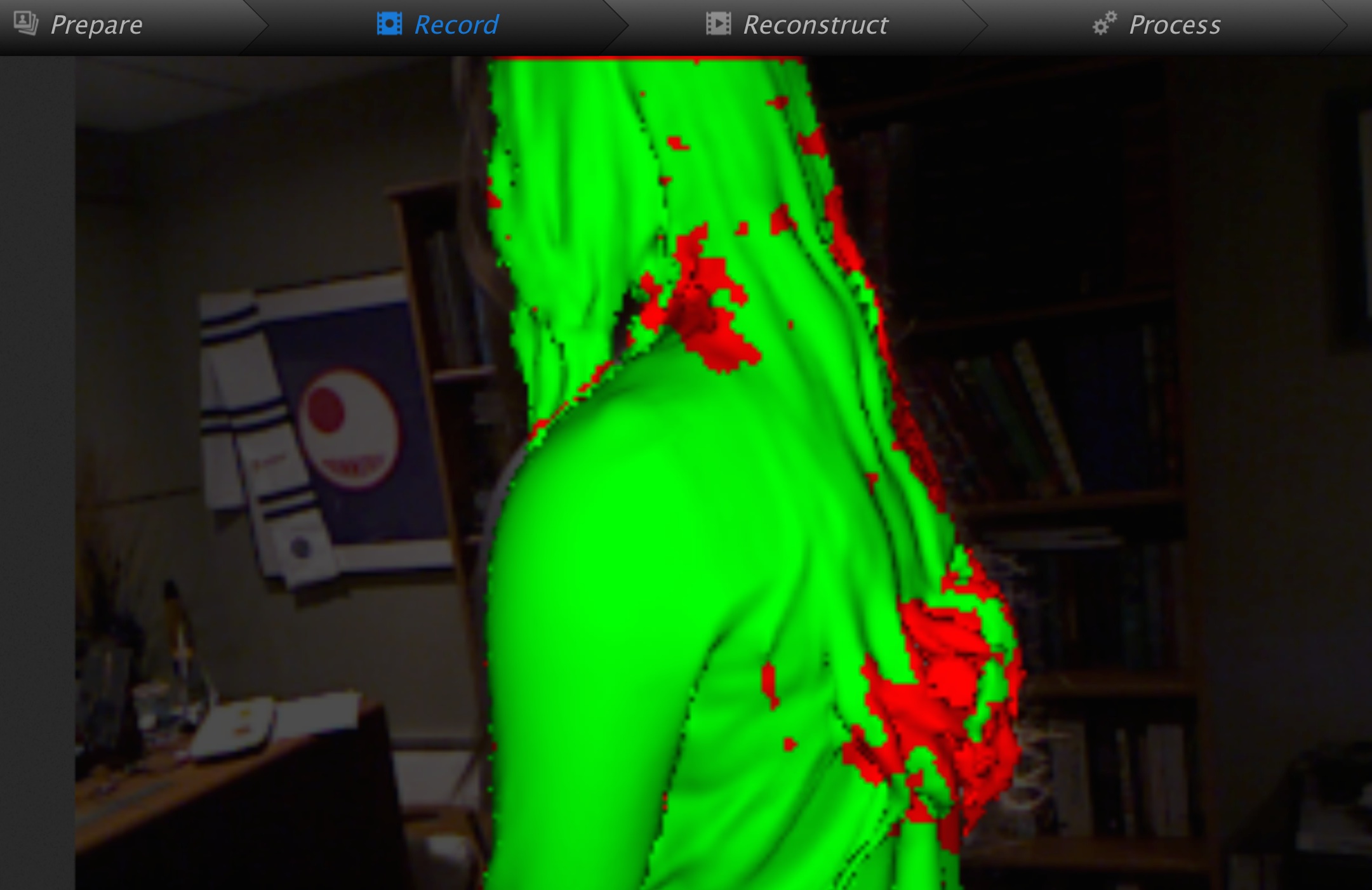

The result is an indeterminate shape, as shown above in captures from our scan. We’re using our favorite 3D scanning software, Skanect, which shows correctly captured geometry in green and indeterminate areas in red. As you can see, our subject’s long hair can’t be captured.

That’s the first problem, and the second quickly follows: you now have a rather messy scan to clean up. You’ll have to cut away the problem areas or use a tool like Meshmixer to smooth out the octopus-like tentacles the scanner makes of the hair. Worse still, complex hairstyles usually produce a large, highly detailed 3D model, sometimes large enough to defeat 3D printer slicing software.

Our best recommendation: scan only bald subjects. They’ll thank you for the cut.