![MIT’s experimental 3D scanner uses gel sensors [Source: MIT]](https://fabbaloo.com/wp-content/uploads/2020/05/image-asset_img_5eb094d95833d.jpg)

MIT has developed a highly unusual 3D scanning method that relies on touch.

There are plenty of 3D scanning processes available today, including dual or single lasers, LIDAR, structured light and photogrammetry. But it seems that MIT has developed a new approach that’s based on touch.

The key to their project is another technology called “GelSight”. This unusual sensor uses a thin gel layer into which an object is pressed. Using differently colored lights and some machine learning algorithms, the GelSight system captures a 3D scan of the pressed portion of the object.

You can see how this sensor works in this MIT video:

But a cookie-sized sensor is not going to do much in the practical world. That’s where the latest research comes in.

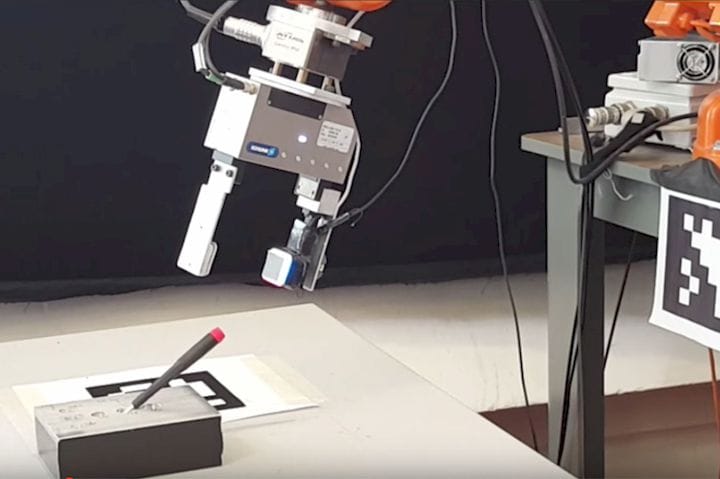

The MIT team attached a GelSight sensor to a KUKA robot arm, enabling the sensor to be moved around an object.

Gel Sensor

They developed sophisticated software to interpret the scanned object’s general shape and then used the robotic arm to press the GelSight sensor into all exposed surfaces of the subject. By incrementally combining the GelSight sensor’s readings, they were able to build a complete 3D model of the entire subject.
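To make the incremental idea concrete, here is a minimal sketch of how individual touch patches could be merged into one model. It is not the MIT team’s software: it assumes each touch yields a small set of 3D points in the sensor’s own frame, and that the robot arm reports the sensor pose as a rotation matrix plus a translation. The names `transform_patch` and `merge_patches`, and the 1 mm grid size, are hypothetical.

```python
def transform_patch(points, rotation, translation):
    """Map patch points from the sensor frame into the shared world frame."""
    world = []
    for x, y, z in points:
        world.append(tuple(
            rotation[i][0] * x + rotation[i][1] * y + rotation[i][2] * z
            + translation[i]
            for i in range(3)
        ))
    return world


def merge_patches(touches, cell=0.001):
    """Accumulate touches into one point set, keeping one point per grid cell.

    touches: iterable of (points, rotation, translation) tuples.
    cell: grid size in the same units as the points (assumed: meters).
    """
    model = {}
    for points, rotation, translation in touches:
        for p in transform_patch(points, rotation, translation):
            key = tuple(round(c / cell) for c in p)
            model.setdefault(key, p)  # first point seen in a cell wins
    return list(model.values())


# Two touches with the same small patch, the second rotated 90 degrees about z.
patch = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0)]
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
rot_z_90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
touches = [
    (patch, identity, (0.0, 0.0, 0.0)),
    (patch, rot_z_90, (0.0, 0.0, 0.0)),
]
model = merge_patches(touches)
```

The grid keeps overlapping touches from piling up duplicate points where patches meet. A real pipeline would likely also refine the alignment between patches, since robot pose readings are never perfectly accurate.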

One advantage of the touch approach is that surface texture is captured to an extraordinary degree. Other 3D scanning processes sometimes handle this poorly, in particular photogrammetry, which often produces bumpy surfaces. Not so when touch sensors directly detect the surface texture.

In terms of technology challenges, combining GelSight sensor readings into a 3D model is a well-understood problem. What’s more interesting is programming the robot to understand how to move the sensor around a given object.

In a sense, the robot has to “pre-scan” the subject before actually scanning it by touch. How did the researchers overcome this challenge?

Recognizing 3D Objects

It turns out they simplified the problem by constraining the types of objects that could be scanned. They explain:

“Wenzhen Yuan, a graduate student in mechanical engineering and first author on the paper from Adelson’s group, used confectionary molds to create 400 groups of silicone objects, with 16 objects per group. In each group, the objects had the same shapes but different degrees of hardness, which Yuan measured using a standard industrial scale.

Then she pressed a GelSight sensor against each object manually and recorded how the contact pattern changed over time, essentially producing a short movie for each object. To both standardize the data format and keep the size of the data manageable, she extracted five frames from each movie, evenly spaced in time, which described the deformation of the object that was pressed.”
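The frame-sampling step in that description is simple enough to sketch. The following is only an illustration, assuming the five frames include the first and last frame of each movie (the quote does not say), with hypothetical function names:

```python
def evenly_spaced_indices(n_frames, n_samples=5):
    """Return n_samples frame indices spread evenly from first to last frame."""
    if n_samples < 2 or n_frames < n_samples:
        raise ValueError("need n_samples >= 2 and at least n_samples frames")
    step = (n_frames - 1) / (n_samples - 1)
    return [round(i * step) for i in range(n_samples)]


def sample_frames(frames, n_samples=5):
    """Pick evenly spaced frames from a recorded press sequence."""
    return [frames[i] for i in evenly_spaced_indices(len(frames), n_samples)]


# A 101-frame movie yields frames 0, 25, 50, 75 and 100.
indices = evenly_spaced_indices(101)
```

Reducing every movie to the same five frames gives the neural network a fixed-size input regardless of how long each press lasted.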

This dataset was used as input to neural network algorithms that could then provide a rough interpretation of a subject and generate the appropriate motion for the touch scan. However, there are plenty of limitations here, not the least of which is that the robot does not understand object properties like flexibility, weight or fragility.

Nevertheless, this is a very interesting step forward that could result in an ability to capture very detailed surface scans of arbitrary objects — if they find a way to make a more general motion algorithm.

Via MIT Department Of Mechanical Engineering
