![Searching for 3D models can be extremely tedious [Source: Thingiverse]](https://fabbaloo.com/wp-content/uploads/2020/05/image-asset_img_5eb098976dcf6.jpg)

Ever since the appearance of personal 3D printing, there’s been an awful problem: searching for 3D models.

The problem is not a shortage of 3D models. The largest public repository of printable 3D models has been MakerBot’s Thingiverse, now with, I believe, over 3 million models. There are other similar repositories, which we detailed in our List of Thingiverse Alternatives post.

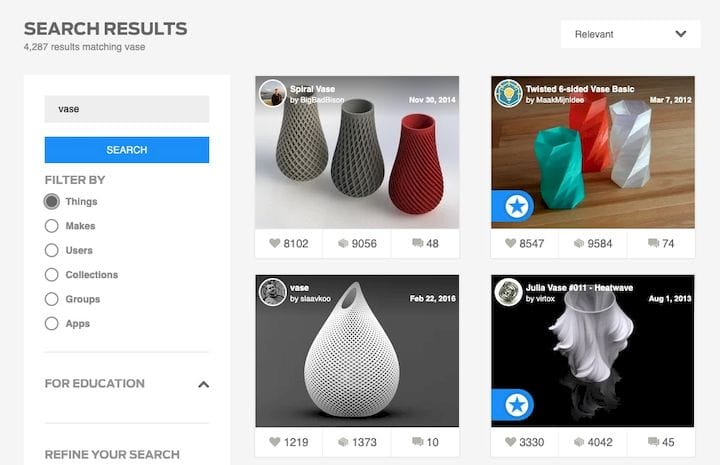

With any of these services, the only practical way to find anything is a text-based search. For example, if you were looking for a vase model, you would search for “vase”. In Thingiverse this produces an unviewable 4,287 results as of this writing. (See image at top.) The other repositories behave much the same way.

Note also that many of the 3D model repositories you encounter on the Internet are intended for visual assets, not 3D printable items, which makes finding printable 3D models even more challenging.

This type of search returns many objects, usually with none of them anywhere close to what you actually wanted. Aha, you say, just use more search terms! Unfortunately, that doesn’t seem to work either, because those who posted the objects did not tag them properly or did not include the specific words you wanted to search for in their descriptions.

You are relegated to simply scrolling through hundreds or even thousands of pictures, hoping to see something that is close to your desired item. And even then you may not see what you want, because the posters do not necessarily use images that you would recognize.

We’re just not searching in the best way. Words only indirectly relate to the geometry of an object. It’s almost like searching for text but only being able to specify individual letters: imagine trying to find a document on elephants when all you can search for is “ph”.

Some services attempt to overcome this problem by being a bit more visual in their search processing. For example, Yeggi, a meta-repository that tracks several actual repositories, shows available images, but still relies strongly on input text. Another now-defunct operation, Yobi3D, offered not only images of the item, but also a rotatable 3D view of each search result.

Google has an advanced image search in which you can upload a sample image and it attempts to locate similar images across the web. This works reasonably well, but it depends on you having a base image to search with.

If someone were to design a geometry-driven 3D model search engine that operated in a similar manner, it would face a similar challenge: do you have a 3D model of something close to what you’re looking for? I’d suggest you probably don’t.

It may be that what is needed is a voice-driven system that can interpret basic descriptions to produce a rough 3D model that could be used for subsequent searches. For example:

“Search for a vase that is thinner at the top than the bottom, and has a wavy surface.”

A rough model might be generated from simple descriptions such as this one using a restricted vocabulary. That geometry could then be used to find similarly shaped models in a repository.
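To make the idea a little more concrete, here is a minimal Python sketch under heavy assumptions. It pretends a vase can be summarized by a single radius-versus-height profile, maps two hypothetical vocabulary phrases (“thinner at top”, “wavy surface”) onto that profile, and ranks a toy repository by distance between profiles. The phrases, the profile descriptor and the function names (`profile_from_terms`, `rank`) are all invented for illustration; they are not any existing service’s API, and a real shape search would need far richer geometry than this.

```python
import math

# Hypothetical descriptor: radius sampled at N heights, bottom (index 0) to top.
N = 16

def profile_from_terms(terms):
    """Build a crude radius profile from a hypothetical restricted vocabulary."""
    radii = [1.0] * N
    for i in range(N):
        t = i / (N - 1)  # 0.0 at the bottom, 1.0 at the top
        if "thinner at top" in terms:
            radii[i] *= 1.0 - 0.5 * t  # taper linearly to half the base radius
        if "wavy surface" in terms:
            radii[i] *= 1.0 + 0.1 * math.sin(6 * math.pi * t)  # three ripples
    return radii

def normalize(profile):
    """Scale a profile so its widest point is 1.0, ignoring overall model size."""
    peak = max(profile)
    return [r / peak for r in profile]

def distance(a, b):
    """Euclidean distance between two equally sampled profiles."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def rank(query, repository):
    """Return repository model names, most similar shape first."""
    q = normalize(query)
    return sorted(repository, key=lambda name: distance(q, normalize(repository[name])))

# Toy repository standing in for real models (all profiles are made up).
repository = {
    "straight cylinder": [1.0] * N,
    "smooth tapered vase": profile_from_terms({"thinner at top"}),
    "wavy tapered vase": profile_from_terms({"thinner at top", "wavy surface"}),
}

# "A vase that is thinner at the top than the bottom, and has a wavy surface."
query = profile_from_terms({"thinner at top", "wavy surface"})
print(rank(query, repository))
# prints ['wavy tapered vase', 'smooth tapered vase', 'straight cylinder']
```

The point of the sketch is the pipeline shape rather than the descriptor: words are turned into geometry once, and from then on the comparison is purely geometric, so poorly tagged models can still be found.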

Now that would be something to use! However, I have never heard of anyone even contemplating development of such a thing. Is anyone interested?
