I’m reading a research paper on a very unusual method of 3D scanning solid objects.

The new method, developed by researchers at several different institutions, proposes using water displacement to capture 3D scans.

It’s based on the ancient principle of Archimedes, which states that the buoyant force on a submerged object equals the weight of the water it displaces. That’s not quite how this works, however.

What they’re using here is the related idea that the volume of water displaced equals the volume of the submerged part of the object. Here’s how it works:

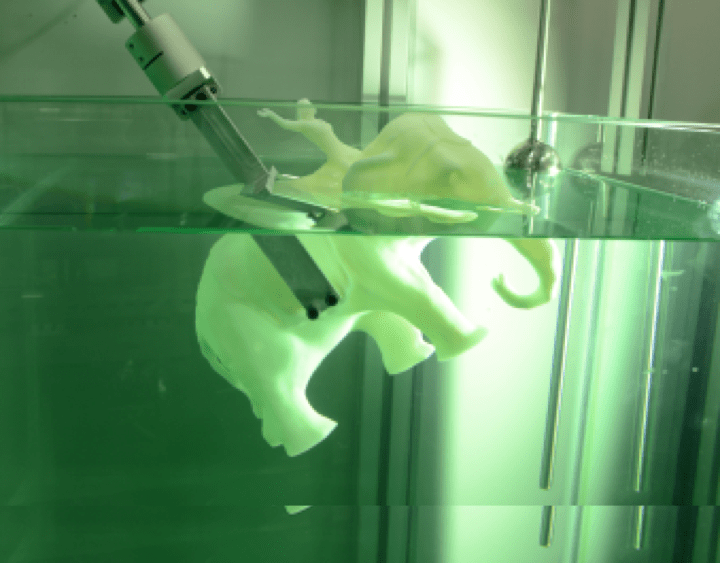

The target object is gradually forced down into a water tank. The water level is measured periodically, and it rises as more of the object is immersed.
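In equation form, assuming a tank with vertical walls and a constant cross-sectional area A (our simplification, not necessarily the researchers’ setup), the submerged volume after step k follows directly from the measured water level h_k, where h_0 is the level before the object touches the water:

```latex
V_k = A\,(h_k - h_0), \qquad \Delta V_k = V_k - V_{k-1} = A\,(h_k - h_{k-1})
```

Because the water’s own volume never changes, any rise in the level is caused by the object, and ΔV_k is the volume of the slice submerged during step k.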

By immersing the object step by step, the method effectively “slices” it into layers, and the volume of each layer can be precisely measured. This works reasonably well because the liquid quickly reaches hidden portions of the object that optical or touch-based 3D scanning approaches might miss.
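A minimal Python sketch of the slicing arithmetic, assuming a constant-area tank and treating each water-level reading as one immersion step. The function name `layer_volumes` and the sample readings are hypothetical, for illustration only:

```python
from typing import List


def layer_volumes(levels: List[float], tank_area: float) -> List[float]:
    """Turn successive water-level readings into per-layer volumes.

    levels[0] is the level before the object touches the water; each later
    entry is the level after one more immersion step. Assumes a tank with
    vertical walls and constant cross-sectional area `tank_area`.
    """
    if len(levels) < 2:
        raise ValueError("need a baseline reading plus at least one step")
    volumes = []
    for previous, current in zip(levels, levels[1:]):
        # The water never changes volume, so any rise in level is the
        # newly submerged slice of the object.
        volumes.append(tank_area * (current - previous))
    return volumes


if __name__ == "__main__":
    # Hypothetical readings in cm for a 20 cm x 20 cm tank (400 cm^2).
    readings = [10.00, 10.05, 10.15, 10.30, 10.40]
    slices = layer_volumes(readings, tank_area=400.0)
    print([round(v, 2) for v in slices])  # [20.0, 40.0, 60.0, 40.0]
    print(round(sum(slices), 2))          # 160.0
```

The per-slice volumes always add up to the total submerged volume (the tank area times the overall level rise), which makes a handy sanity check on the readings.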

But then, all you get is a single set of volume measurements for the layers – how does that transform into a 3D model? There are further steps to take.

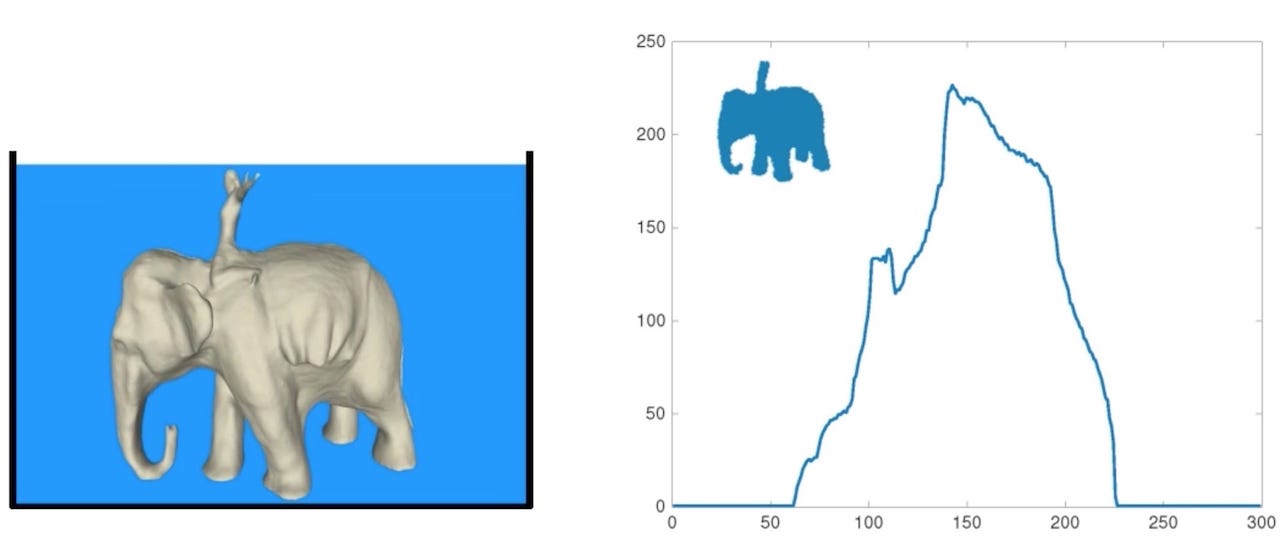

The object is then re-oriented and dipped progressively once again. In effect, this slices the object along a different plane, so the resulting “volume profile” differs from the first one.
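To see why a second orientation adds information, here is a simplified Python model of a volume profile: the object is a set of unit voxels, and each voxel counts toward the slice its centre falls in when projected onto the dipping direction. This is our own illustrative sketch (the `dip_profile` function and both test shapes are hypothetical); it ignores the water-level rise and assumes water reaches every surface.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple

Voxel = Tuple[int, int, int]


def dip_profile(voxels: Iterable[Voxel],
                direction: Tuple[float, float, float],
                slice_thickness: float = 1.0,
                voxel_volume: float = 1.0) -> Dict[int, float]:
    """Volume in each slice when the voxel shape is dipped along `direction`.

    Simplifying assumptions: every voxel is solid, water reaches every
    surface, and the rise of the water level itself is ignored.
    """
    dx, dy, dz = direction
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    ux, uy, uz = dx / norm, dy / norm, dz / norm
    counts: Counter = Counter()
    for x, y, z in voxels:
        depth = x * ux + y * uy + z * uz  # how far along the dip this voxel sits
        counts[math.floor(depth / slice_thickness)] += 1
    return {k: counts[k] * voxel_volume for k in sorted(counts)}


if __name__ == "__main__":
    # Two hypothetical two-voxel shapes lying in the z = 0 plane.
    shape_a = [(0, 0, 0), (1, 1, 0)]
    shape_b = [(0, 1, 0), (1, 0, 0)]
    for axis in [(1, 0, 0), (0, 1, 0), (1, 1, 0)]:
        print(axis, dip_profile(shape_a, axis), dip_profile(shape_b, axis))
```

The two shapes produce identical profiles when dipped along x and along y, but different ones along the diagonal, which is why a single profile (or even a few) cannot pin down a shape on its own.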

This dipping process is repeated many times, producing a collection of volume profiles from various orientations. The researchers then wrote software that combines these “views” into a coherent 3D model, much as conventional 3D scanners merge their different views into a 3D model.
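The researchers’ reconstruction software is not described here, so the following is only a stand-in: a 2D toy version using the algebraic reconstruction technique (ART, a projected Kaczmarz method), a classic approach from tomography rather than the researchers’ own algorithm. Each cell holds an occupancy between 0 and 1, the “views” are slice sums along rows, columns and both diagonals, and the solver repeatedly nudges cells until every measured slice sum is matched. The grid size, directions, function names and L-shaped test object are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Grid = List[List[float]]
SliceKey = Tuple[str, int]

# Hypothetical dipping directions on a 2D grid: each maps a cell (i, j) to its slice index.
FAMILIES: Dict[str, Callable[[int, int], int]] = {
    "rows": lambda i, j: i,
    "cols": lambda i, j: j,
    "diag": lambda i, j: i - j,
    "anti": lambda i, j: i + j,
}


def slice_members(n: int) -> Dict[SliceKey, List[Tuple[int, int]]]:
    """Map each (direction, slice index) to the grid cells that slice contains."""
    members: Dict[SliceKey, List[Tuple[int, int]]] = {}
    for name, key in FAMILIES.items():
        for i in range(n):
            for j in range(n):
                members.setdefault((name, key(i, j)), []).append((i, j))
    return members


def profiles(grid: Grid) -> Dict[SliceKey, float]:
    """Forward model: total occupancy ("volume") of every slice in every direction."""
    return {k: sum(grid[i][j] for i, j in cells)
            for k, cells in slice_members(len(grid)).items()}


def reconstruct(measured: Dict[SliceKey, float], n: int, sweeps: int = 2000) -> Grid:
    """Projected Kaczmarz / ART: adjust cells until every slice sum matches."""
    x = [[0.0] * n for _ in range(n)]
    members = slice_members(n)
    for _ in range(sweeps):
        for k, cells in members.items():
            # Spread this slice's mismatch evenly over its cells (projection onto
            # the slice equation), then clip each cell to the physical range [0, 1].
            step = (measured[k] - sum(x[i][j] for i, j in cells)) / len(cells)
            for i, j in cells:
                x[i][j] = min(1.0, max(0.0, x[i][j] + step))
    return x


if __name__ == "__main__":
    n = 5
    truth = [[0.0] * n for _ in range(n)]
    for i, j in [(1, 1), (2, 1), (3, 1), (3, 2), (3, 3)]:  # hypothetical L-shaped object
        truth[i][j] = 1.0
    measured = profiles(truth)
    guess = reconstruct(measured, n)
    fitted = profiles(guess)
    worst = max(abs(fitted[k] - v) for k, v in measured.items())
    print(f"largest profile mismatch: {worst:.2e}")
    for row in guess:
        print(" ".join("#" if v > 0.5 else "." for v in row))
```

Matching every profile does not guarantee recovering the exact original, since different shapes can share the same few profiles; adding more dipping directions narrows the possibilities, which is consistent with the large number of dips the researchers needed.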

This process is unusual, and its major advantage is that it can capture hidden interior details in a 3D scan, something most other techniques can do only by disassembling the object and scanning its parts.

It is also likely quite scalable. Imagine a house-sized vat with a crane dipping, say, an automobile. The same principles would hold, and a 3D model could be captured. I’m not sure this would be financially feasible, but it could certainly be done technically.

But there are a number of challenges this process must overcome.

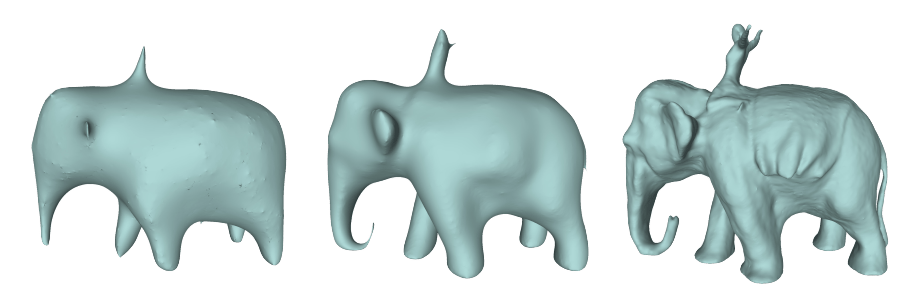

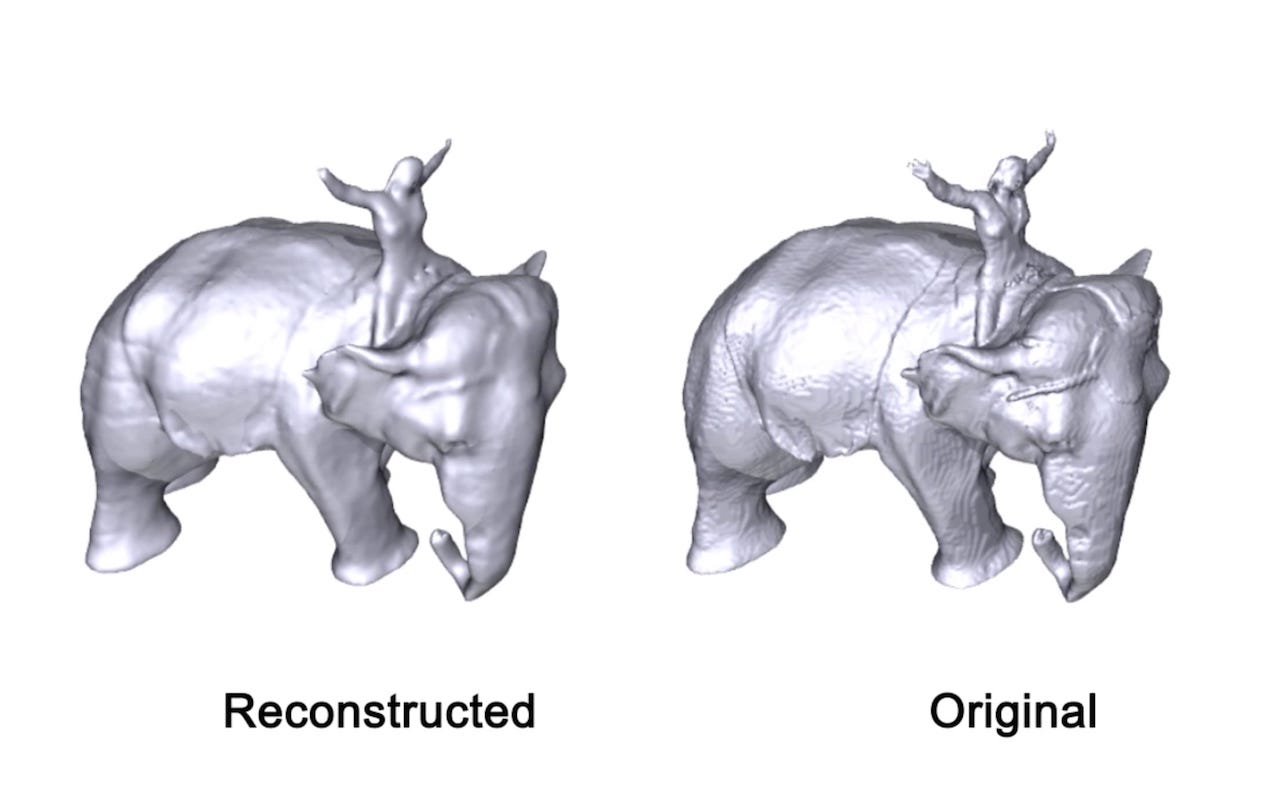

As you can see here, the resolution is limited. While the overall shape is captured, fine details are not. This makes the process unsuitable for capturing the interior details of some parts, such as aerospace components with intricate internal structures.
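One plausible contributor to limited resolution (our reasoning, not a figure from the paper): a level sensor that can resolve a height change of δh in a tank of cross-sectional area A can only register volume changes of about A × δh. The sketch below uses hypothetical tank sizes and a hypothetical sensor resolution to show how this floor grows with the tank, which also tempers the house-sized vat idea above.

```python
def smallest_detectable_volume(tank_area_cm2: float, level_resolution_cm: float) -> float:
    """Smallest volume change (cm^3) that shows up as a measurable level change.

    Assumes vertical tank walls, so a volume change dV raises the level by dV / A.
    """
    return tank_area_cm2 * level_resolution_cm


if __name__ == "__main__":
    sensor = 0.01                # hypothetical level resolution: 0.1 mm, expressed in cm
    desk_tank = 20.0 * 20.0      # hypothetical 20 cm x 20 cm tank
    house_vat = 1000.0 * 1000.0  # hypothetical 10 m x 10 m vat
    print(f"desk tank: {smallest_detectable_volume(desk_tank, sensor):.1f} cm^3")
    print(f"house vat: {smallest_detectable_volume(house_vat, sensor) / 1000:.1f} litres")
```

With the same sensor, the vat’s smallest resolvable slice is 2,500 times larger than the desk tank’s (10 litres versus 4 cm³).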

Another challenge is speed. As you might imagine, the immersion must proceed slowly to avoid waves and splashes that would disturb the water-level readings.

Worse, the immersion must be repeated a large number of times to capture enough of the object’s geometry. Here we see that it took literally 1,000 “dips” to capture even a rough model of this elephant. That will not be quick by any means.
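A back-of-the-envelope estimate makes the point concrete. Only the 1,000 dips come from the article; the number of steps per dip and the settling time per step below are hypothetical placeholders, so the printed figure is illustrative rather than a measured scan time.

```python
def scan_hours(dips: int, steps_per_dip: int, seconds_per_step: float) -> float:
    """Total scan time in hours: every step of every dip waits for the water to settle."""
    return dips * steps_per_dip * seconds_per_step / 3600.0


if __name__ == "__main__":
    dips = 1000             # from the elephant example
    steps_per_dip = 100     # hypothetical number of slices per dip
    seconds_per_step = 2.0  # hypothetical time to lower the object and let the surface settle
    print(f"about {scan_hours(dips, steps_per_dip, seconds_per_step):.1f} hours")
```

Even with these modest hypothetical numbers, the total comes to more than two days of continuous dipping.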

It’s also possible that some originals should not be immersed in water at all. Imagine a priceless historical artifact that requires 3D scanning. Would we dip it into water 1,000 times? Probably not. The same may be true of some machine components.

Finally, there are no doubt some geometries where this process would not work. Imagine a lightweight object with sparse internal structures sealed completely inside: the water could never reach them, so the dip technique could not “scan” them, and the result would appear solid.
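The failure mode can be illustrated with a flood fill on a 2D cross-section: water enters from the edges of the grid and spreads through connected empty cells, and any cavity it never reaches looks solid to the scan. The grid, the `apparent_shape` function and the two test objects (a sealed hollow box and an open cup) are hypothetical, and the model is a simplification of real 3D dipping.

```python
from collections import deque
from typing import Deque, List, Tuple


def apparent_shape(solid: List[List[bool]]) -> List[List[bool]]:
    """What a dip scan would 'see': solid cells plus any cavity water cannot reach.

    Water enters from the grid border and spreads through 4-connected empty
    cells; empty cells it never reaches are indistinguishable from solid.
    """
    rows, cols = len(solid), len(solid[0])
    wet = [[False] * cols for _ in range(rows)]
    queue: Deque[Tuple[int, int]] = deque()
    for r in range(rows):
        for c in range(cols):
            on_border = r in (0, rows - 1) or c in (0, cols - 1)
            if on_border and not solid[r][c]:
                wet[r][c] = True
                queue.append((r, c))
    while queue:  # breadth-first flood fill of the water
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and not solid[nr][nc] and not wet[nr][nc]:
                wet[nr][nc] = True
                queue.append((nr, nc))
    return [[not wet[r][c] for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    # Hypothetical cross-section: a sealed hollow box (left) and an open cup (right).
    picture = [
        ".........",
        ".###.#.#.",
        ".#.#.#.#.",
        ".###.###.",
        ".........",
    ]
    solid = [[ch == "#" for ch in row] for row in picture]
    for row in apparent_shape(solid):
        print("".join("#" if cell else "." for cell in row))
```

In the output, the sealed box’s cavity is filled in, while the cup’s open interior is preserved.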

Those constraints aside, this could well become a new, niche type of 3D scanning suited to a subset of object types.

For now, though, it’s only research, and we’ll have to wait for an entrepreneur to pick it up and run with it.