A paper from Oxford and Microsoft researchers details an experimental workflow enabling capture of 3D models using only a smartphone’s camera.

Researchers Peter Ondrúška, Pushmeet Kohli and Shahram Izadi developed what they call the “first pipeline for real-time volumetric surface reconstruction and dense 6DoF camera tracking running purely on standard, off-the-shelf mobile phones”.

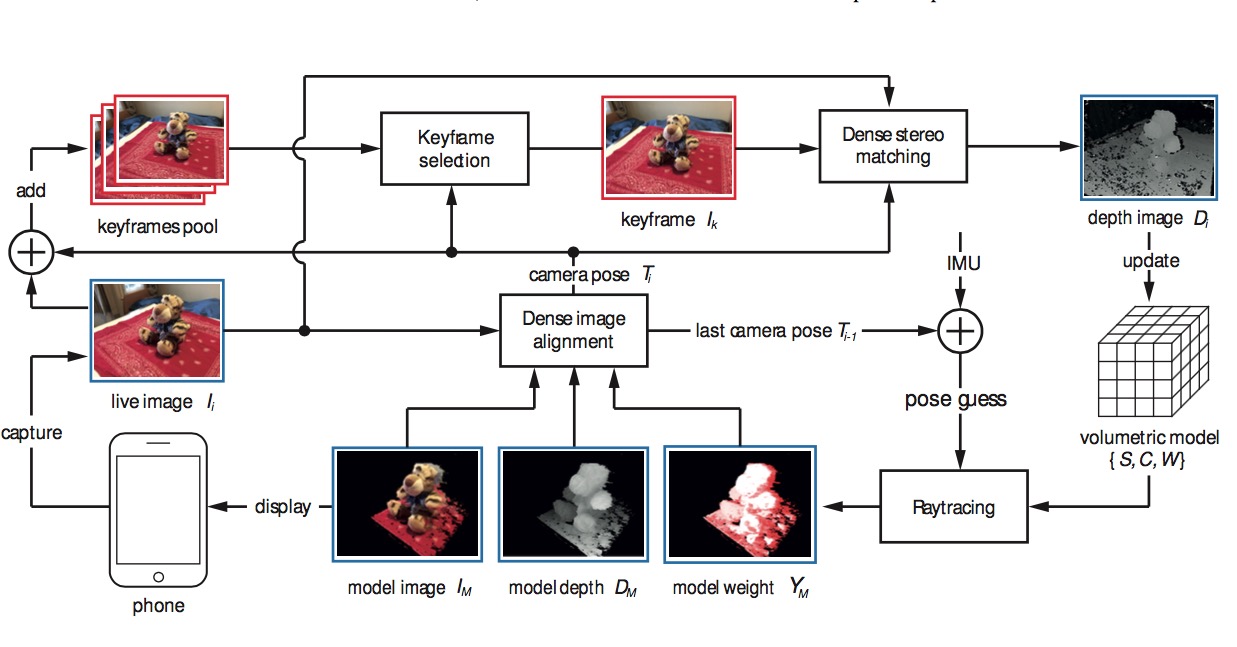

The system relies on “dense 6DoF tracking”, where 6DoF means six degrees of freedom: three for the phone’s position and three for its orientation. Evidently leveraging the smartphone’s accelerometer and other sensors, they’ve been able to carefully track the phone’s movements in space and relate those movements to the images captured along the way. Software then converts the captured data into 3D models.
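To make “six degrees of freedom” concrete, here is a minimal sketch of how a phone’s pose could be represented and used to place a point seen by the camera into a fixed world frame. This is our own illustration, not the researchers’ code: the `Pose6DoF` class, its field names, the units and the roll/pitch/yaw convention are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """Hypothetical 6DoF pose: 3 translations plus 3 rotations."""
    # Three translational degrees of freedom (units assumed to be metres)
    tx: float
    ty: float
    tz: float
    # Three rotational degrees of freedom, in radians
    roll: float   # rotation about the x axis
    pitch: float  # rotation about the y axis
    yaw: float    # rotation about the z axis

    def rotation(self):
        """Return the 3x3 rotation matrix Rz(yaw) * Ry(pitch) * Rx(roll)."""
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def camera_to_world(self, point):
        """Rotate a camera-frame point, then shift it by the translation."""
        r = self.rotation()
        t = (self.tx, self.ty, self.tz)
        return tuple(
            sum(r[i][j] * point[j] for j in range(3)) + t[i]
            for i in range(3)
        )


# Example: a phone moved to (1, 2, 3) and turned a quarter turn about z.
pose = Pose6DoF(tx=1.0, ty=2.0, tz=3.0, roll=0.0, pitch=0.0, yaw=math.pi / 2)
print(pose.camera_to_world((1.0, 0.0, 0.0)))
```

A tracker’s job is to estimate these six numbers for every frame, so that the surface seen in each image can be placed consistently in the same 3D space.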

A key requirement recognized by the researchers is “real-time feedback”. We’ve used systems in the past in which the resulting 3D model is not displayed until after the scanning takes place. This often results in a bad model, because you miss a spot and don’t realize it until it’s too late. The new workflow does provide real-time feedback, so gaps can be spotted and filled in while you are still scanning.

An overview of their process is shown in the image above, where you can see the key steps involved in the capture, including selecting images, matching and aligning points and developing the 3D model.

Systems such as this must cope with a wide variety of error conditions, since the camera, the environment and even the operator are quite unpredictable, and handling those errors must form part of the workflow.

If this approach is perfected, it may eventually find its way into smartphones as an app. If that happens, then we could see a very rapid increase in the number of 3D scans available for printing. However, it’s likely the scans captured by the new workflow will require some post-processing to properly prepare them for 3D printing.

Via Oxford (PDF) (Hat tip to Will)